The language of research papers is no more carefully crafted after peer review, but papers do say more about the potential limitations of the work, a new study has found.

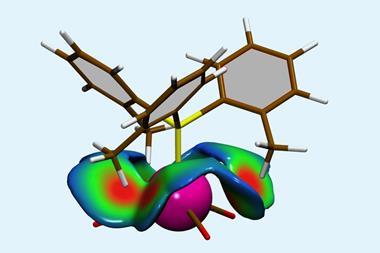

The study compared the language of 446 randomised controlled trial reports, published in BioMed Central and BMJ Open bioscience journals in 2015, before and after they went through peer review. Using two software tools they had previously created, the authors detected language describing limitations as well as linguistic hedges.

They found that the number of sentences dedicated to mentioning limitations of studies increased by 56% – an additional 1.39 sentences per paper – after peer review. But peer review didn’t result in authors hedging their claims to make their statements less speculative or bold, the study found.
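As a rough sanity check on those two figures, the implied averages can be worked out directly. This is only a back-of-the-envelope sketch: it assumes the 56% rise and the 1.39 extra sentences both refer to the same per-paper average, which the article does not state, and the variable names are illustrative.

```python
# Figures quoted in the article.
relative_increase = 0.56   # 56% more limitation sentences after peer review
added_sentences = 1.39     # extra limitation sentences per paper

# Assumption: both figures describe the same per-paper mean,
# so added_sentences = relative_increase * baseline.
baseline = added_sentences / relative_increase
after_review = baseline + added_sentences

print(f"Implied sentences before review: {baseline:.2f}")
print(f"Implied sentences after review:  {after_review:.2f}")
```

Under that assumption, the average manuscript would have gone from roughly two and a half limitation sentences to just under four.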

Before peer review, roughly 45% of manuscripts didn’t mention any limitations, the study found, but this figure dropped to around a third after the reviewing process. The authors write that although their work suggests that peer review can have an impact on papers that acknowledge few or no limitations, it doesn’t support the theory that the review process leads to authors becoming more conservative about the language they use.

Gerben ter Riet at the University of Amsterdam in the Netherlands, who led the study, did not reply to requests for an interview.

Paulina Stehlik, who studies clinical research methods and evidence-based practice at Bond University in Australia, says the language of research papers is worth studying. ‘Journalists will often pick up on the language used in presenting study claims and reflect that in their reporting of it, so ensuring that claims made by studies are not overstated is of public interest,’ she says. Recent research has suggested that hype in science or health news often originates from university press releases, rather than from news stories.

‘Personally, I was very surprised to learn that in the examined fields almost 50% of the submitted manuscripts did not mention any limitations,’ says Sven Hug, who studies research evaluation and bibliometrics at the University of Zurich in Switzerland. ‘It’s very valuable that the authors uncovered the extent of this detrimental research practice.’

One limitation of the study itself, Hug notes, is that it can’t say definitively whether the limitation statements were added to satisfy peer reviewers. Another is that the algorithm the study uses merely picks up hedge words, but can’t understand the context they are used in, notes Tony Ross-Hellauer, an information scientist at the Graz University of Technology in Austria. This means that hedge words used in sentences that don’t make claims about the study may also be counted, he adds.
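The context problem Ross-Hellauer describes is easy to see in a toy example. The sketch below is not the study's software: the word list and the `count_hedges` function are hypothetical, and it simply matches words against a fixed lexicon without looking at what the sentence is about.

```python
import re

# Hypothetical hedge lexicon for illustration only; not the study's word list.
HEDGE_WORDS = {"may", "might", "could", "suggest", "suggests", "possibly", "appears"}

def count_hedges(sentence: str) -> int:
    """Count words in the sentence that appear in HEDGE_WORDS, ignoring context."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return sum(token in HEDGE_WORDS for token in tokens)

# A sentence that hedges a claim about the findings.
claim = "These results suggest the drug may reduce symptoms."
# A procedural sentence that makes no claim about the findings.
procedure = "Participants may withdraw from the trial at any time."

claim_hedges = count_hedges(claim)
procedure_hedges = count_hedges(procedure)

print(claim_hedges, procedure_hedges)
```

The second sentence says nothing about the trial's results, yet a purely keyword-based count still registers 'may' as a hedge.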

‘In the bigger scheme of things, in terms of research waste and the reproducibility crisis, while exaggeration of study findings is definitely part of the problem, it is only one aspect of it,’ Stehlik says. ‘For example, an article could have appropriately worded their findings, but the findings themselves might be false due to poor study design or analysis.’

References

K Keserlioglu, H Kilicoglu and G ter Riet, Res. Integr. Peer Rev., 2019, 4, 19 (DOI: 10.1186/s41073-019-0078-2)
