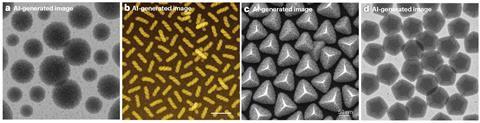

A new nanoscience paper describes an exciting new material that closely resembles a much-loved puffed corn snack. A scatter of twisted tubes, dubbed nano-cheetos, is shown in a clear electron microscopy image. The only problem: the material isn’t real. The image was made with ChatGPT, by a team of materials scientists who warn that such AI-generated images could make scientific fraud near-undetectable.

Manipulating figures with tools like Photoshop tends to leave telltale signs such as random straight lines and duplications. ‘With AI-generated images, those hallmarks are gone,’ says image integrity consultant Mike Rossner.

Some attempts at sneaking AI-generated images into papers – for example an illustration of a rat with giant testicles – are almost laughably easy to spot. That wasn’t the case for the nanomaterials images that PhD researcher Nadiia Davydiuk created to show group leader Quinn Besford from the Leibniz Institute of Polymer Research Dresden, Germany, what was possible with AI.

‘It shocked me,’ Besford recalls. Neither he nor his colleague Matthew Faria, from the University of Melbourne, Australia, could tell the difference. ‘The people that are really working on [image integrity] are very aware of AI and are very worried about it,’ says image integrity analyst Jana Christopher.

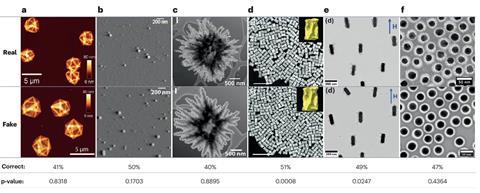

Besford, Faria and their teams surveyed 250 scientists, asking them to distinguish real from AI-generated microscopy images. The responses confirmed that this was essentially impossible, even for experts. The team is now calling for urgent action to avoid the literature becoming swamped with fake figures.

They suggest that publications should always include the raw files created by the instrument. These are much harder to fake than images in a PDF. ‘I think the solution needs to go much deeper, to institutional repositories of data’, where raw data is kept safe from being modified or deleted, Rossner notes.

There should also be less pressure from journals and reviewers to create ‘perfect’ images. ‘The community needs to get back to appreciating dirt,’ says Besford. ‘If you’re making conclusions about chains of nanoparticles, and there’s a couple of free nanoparticles, that doesn’t mean your conclusions are invalid.’

Replication studies, published alongside high-impact papers, could not only weed out misconduct but also uncover genuine mistakes, Faria points out. However, funding replication studies is notoriously difficult. Rossner says that near-guaranteed publication could be incentive enough, although he concedes that this might not work for less high-profile journals.

According to Rossner, automated screening with AI image detectors such as Proofig AI or Imagetwin might be the only way to stay on top of the problem. Both programs are used by publishing giants Springer Nature, Elsevier and Taylor & Francis, as well as by Royal Society of Chemistry journals.

When Rossner tried Proofig on Besford and Faria’s article, it didn’t catch the AI-generated images. In the hands of Proofig’s founder and CEO Dror Kolodkin-Gal, the program did flag one of the figures. He points out that the program is tuned for a very low false-positive rate to avoid overwhelming editorial teams and to prevent unfair accusations against authors. ‘The trade-off is that some AI-generated images may slip through on the first pass,’ Kolodkin-Gal says.

‘There will of course always be individuals who cut corners and cheat a bit, or a lot,’ Christopher says. According to Christopher, academia’s publish-or-perish culture ‘makes the literature extremely vulnerable to fraud’ – whether that’s in the form of AI-generated images or entirely made-up papers produced by paper mills.

‘We need some large-scale solutions now,’ she says. ‘We know that neither the correction system nor the peer review system are geared up for the scale of the problem.’

References

N Davydiuk et al, Nat. Nanotechnol., 2025, DOI: 10.1038/s41565-025-02009-9
