Machine learning can complement and reinforce human intuition and experience

Artificial intelligence (AI) has been increasingly employed to support drug discovery over the last decade. Pioneering start-ups have worked to convince traditional firms that AI and machine learning can save time and money, while amplifying novelty.

An estimated 86% of drug development programmes ended in failure between 2000 and 2015. Meanwhile, estimates of the cost of bringing a new drug to market range from hundreds of millions to several billion dollars. ‘We need to change the economics of the pharmaceutical industry. We need to change the way drugs are invented,’ says Andrew Hopkins, founder of Exscientia.

When Exscientia began around 10 years ago, recalls Hopkins, ‘there was very strong resistance to the concept that algorithms and machine learning could do something as creative as drug discovery’. But attitudes have shifted. Big pharma is betting on AI, and partnerships are proliferating between established drug companies and newer start-ups.

Sanofi partnered with Exscientia in 2022, contributing $100 million (£80 million) in cash to help develop 15 novel small molecule candidates in oncology and immunology. If the project hits its development milestones, Sanofi could pay up to $5.2 billion. AI biotech Recursion in Utah, US, signed a deal with Roche and Genentech to uncover molecules using AI for neurological and cancer indications, netting $150 million up front, with up to $300 million more on offer in performance milestone payments. And last year, AstraZeneca expanded its drug discovery collaboration with BenevolentAI to encompass lupus and heart failure.


In January 2023, Bayer and Google Cloud announced a joint effort to accelerate Bayer’s quantum chemistry calculations to drive early drug discovery via machine learning. This should boost in silico modelling of biological and chemical systems, and thereby help identify drug candidates. However, in late January, Google’s parent company Alphabet confirmed it would cut 12,000 jobs worldwide, including many in its teams supporting drug discovery. Around the same time, BioNTech acquired AI company InstaDeep for £362 million to help develop immunotherapies and vaccines.

‘The ability to map novel disease pathways and identify new targets has huge potential,’ says Miraz Rahman, a medicinal chemist at King’s College London, UK. ‘That’s why we are seeing large pharma companies partnering with AI-based companies.’ This is underpinned by a deluge of data, which can be mined by AI, and increasingly automated wet lab experiments.

Seeing past messy biology

Biology is complicated, while drug discovery is powered by information. Recursion was set up to draw up better maps of human disease biology to allow scientists to discover better medicines. ‘We’ve got a real lack of understanding of fundamental human biology – it’s indescribably complex,’ says Joseph Carpenter, who heads up medicinal chemistry at Recursion. At the same time, the volume of new scientific publications as well as cell, tissue and patient data relevant to disease targeting is huge and growing rapidly. AI can spot patterns in troves of data that are impossible for a human to recognise.

Evotec, headquartered in Hamburg, Germany, views AI as a tool to bring biologists and chemists together, digesting the flood of scientific articles and insights – using natural language processing – and turning it into a knowledge graph. ‘You can bring the data together, in one place, where you can have a conversation between different types of scientists,’ says David Pardoe, head of global molecular architects at Evotec. ‘The secret is to bring together human intuition and experience and the data.’

While AI and machine learning must convert biology into quantifiable values, humans are still an essential element in the equation. ‘We want to encode drug discovery,’ says Hopkins. ‘But we need to understand human expertise and tacit knowledge and creativity, and ask how that becomes something that is codable, repeatable and scalable.’ This is a reason why the company calls its AI platform Centaur, after the human-horse hybrid creature from Greek myth. Molecules created using Centaur are now in clinical trials to treat cancer, obsessive-compulsive disorder and Alzheimer’s disease psychosis – the latter two were both developed with Japanese firm Sumitomo Dainippon Pharma.

The field has benefited from technological advances. ‘The first thing that has changed is computing power, and also the vast amount of data that is available,’ says Rahman. Predictive models work better when there are large training sets, and some AI biotechs now lean on the fastest supercomputers in the world. Rahman once took six months to run a molecular dynamics simulation of a molecule interacting with a protein target, but better resolution is now possible in days or weeks. ‘That means that you are getting much more realistic data on how compounds interact with the biological system,’ Rahman explains. Improved insights into molecular docking, molecular dynamics and the pharmacokinetic properties of molecules are crucial in the preclinical phase if candidates are not to fail, expensively, further down the line.

Plenty to work with

Using AI, Insilico has identified a new target for idiopathic pulmonary fibrosis, a chronic lung disease, and then designed a small molecule drug candidate. This was only possible by tapping into huge amounts of information. ‘For our AI platform, we have 10 million data samples, 40 million publications and close to two million compounds and also biological data, including structure, activity, safety and druggability,’ says Feng Ren, co-chief executive of Insilico. Carpenter notes that Recursion has gathered 21 petabytes of data.

Exploiting colossal datasets, machine learning and AI aim to deliver fewer yet superior molecules. This contrasts with the traditional combinatorial approach adopted in the 1990s, which synthesised millions of compounds, of which a tiny proportion progressed beyond the lab. Pardoe says the focus is increasingly on creating molecules that are information-rich. ‘We don’t want to make thousands of molecules anymore,’ he says. ‘We want molecules that help you understand what a [drug] binding site looks like, or where you’re going to interact with a binding site.’


Exscientia has reported a novel CDK7 inhibitor, with potential for treating cancer. Just 136 novel compounds were made and tested – in less than a year – to find that inhibitor. Meanwhile, its new immuno-oncology candidate for patients with solid tumours was ‘generated by our algorithms in a rapid discovery process, with around 176 novel compounds made and tested to find that compound’, says Hopkins. He says that on average they make around 250 novel molecules going from an idea to a drug candidate, whereas the industry on average makes about 2500 to 5000. Schrödinger, described as leveraging a physics-based platform, assessed 8.2 billion compounds computationally and synthesised just 78 molecules over a 10-month period of ‘design, make, test,’ before selecting an anticancer molecule that was cleared to enter a clinical trial in patients with lymphoma.

Faster discovery is touted as a major AI upside. A 2019 paper from Insilico reported on a deep generative model for small molecules that optimises synthetic feasibility, novelty and biological activity for fibrosis and other diseases. ‘The experiments were done in 21 days, and we generated a molecule that went all the way into mice within 46 days,’ says founder Alex Zhavoronkov. Pardoe says Evotec can now move from exploring a target to possessing a preclinical development candidate in 21 months or less.

But Rahman warns that, despite gargantuan amounts of data, predictive models are always fallible. ‘You need the experimental data to validate [the models],’ he adds, ‘but what will happen in the next probably five to 10 years is that experimental datasets will increase, enrich these AI models. That will probably significantly heighten the success rate.’ Insilico is one of several AI companies investing in highly automated wet labs.

Recursion’s Carpenter agrees: ‘Having massive datasets is critical, but the data has to be reliable,’ he says. Over two million wet lab experiments a week are performed in the company’s highly automated lab in Salt Lake City, US. Predictions and calculations, synthesis and lab experiments are then brought together for drug discovery.

Access all areas

AI devotees are quick to say that their algorithms are agnostic to therapeutic area. But efforts are nevertheless skewed, particularly towards cancer. ‘That’s where the return on investment is significantly higher,’ says Rahman. He advocates ramping up the use of AI to explore antimicrobials, especially for difficult Gram-negative bacteria such as Pseudomonas aeruginosa, where any improvement in success rates would be welcome. The lab of Jim Collins at the Massachusetts Institute of Technology, US, is recruiting AI to discover new antibiotics, while Exscientia is collaborating with the Bill & Melinda Gates Foundation to develop therapeutics for viruses with pandemic potential.

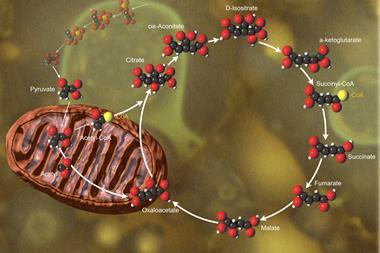

There is also interest in neurodegenerative diseases, which have been notoriously difficult to develop drugs for. ‘These are diseases where traditional approaches have not had much success, and there’s a large unmet clinical need,’ notes Rahman. AI could uncover druggable targets for Alzheimer’s disease – where there is arguably a lack of clear disease targets. BenevolentAI has run a phase 2 trial in the UK for its AI-inspired candidate for Parkinson’s disease. The company’s pipeline now includes a candidate for atopic dermatitis in phase 2. In the US, Insitro began a five-year collaboration with Bristol Myers Squibb in 2020 to work on two neurodegenerative disorders – amyotrophic lateral sclerosis and frontotemporal dementia. Insilico is also interested in central nervous system disorders, including Parkinson’s, along with fibrosis, oncology and immunology. All are related to ageing, explains Ren: ‘We want to use our algorithm to understand the biology of ageing and age-related diseases.’

Scaling complexity

Beyond looking at small molecule drugs, AI and machine learning are beginning to be directed at much more complex biological drugs. In late 2022, Exscientia began trying to design human antibodies. ‘We are looking at it as a proof of concept to use deep learning approaches to generate and screen, virtually, incredibly large libraries of virtual antibodies,’ says Hopkins. The goal ultimately is to develop antibodies against specific targets.

For protein-based therapeutics, or to investigate drug targets that aren’t easily available to study in the lab, modelling how they will fold is a crucial factor in determining their behaviour. AlphaFold, the algorithm developed by Google subsidiary DeepMind, has vastly improved predictions of 3D protein structures from amino acid sequences. And while it is unlikely to immediately revolutionise drug discovery, Pardoe predicts that AlphaFold will have a major impact.

Others, such as Generate Biomedicines in the US, are developing their own algorithms. Generate has combined computational and laboratory characterisation of known proteins, seeking statistical patterns in amino acid sequence, structure and function. Learning general principles of folding from existing structures could allow the company to design entirely new protein architectures, or to make binders that perturb human proteins in just the right way to address a disease state, says John Ingraham, head of machine learning at Generate. The company is collaborating with Amgen on five clinical targets.

Another trend is for AI to be used not just in early drug discovery, but in development: for lead optimisation, predicting toxicity, pharmacokinetics, and even selecting patient subgroups in clinical trials. ‘The whole process of integrating AI is going to change the way we see drug discovery and development,’ Rahman predicts. ‘Our goal is to make drug companies more productive, with a combination of humans and machines,’ says Hopkins. ‘I feel we’ve won the argument, and we believe that all drugs in the future will be designed in this way.’

Encoding creativity in drug discovery
