Can you trust your results like a skydiver trusts their altimeter?

As my computer buzzed with the livestream of high-altitude skydiver Felix Baumgartner standing on the edge of a stratospheric balloon preparing to free-fall, my colleague pointed at the gas chromatogram on a different part of the screen. ‘See that inflection there? That’s hydrogen. You said that’s where it comes out!’

‘Yes,’ I replied. ‘But that inflection can’t be called a signal, and neither can this one or this one,’ I said, circling my cursor around two similar baseline inflections. ‘With this method, and the need for a reliable signal-to-noise ratio, this shows nothing.’

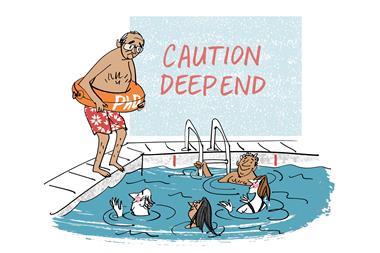

‘But there could be something there.’ My colleague stood back. ‘This reaction is very trace. Take that peak, integrate it, and get me an hourly yield. This proposal is due shortly, and this is a great confirmation!’

The integrity of your data is paramount. Decisions are based on it, money flows by it, and careers, programs and reputations are made and broken by it. Much like a skydive from the stratosphere, experimental chemistry holds an allure of exploration and risk. That exploration must be framed by method development, qualification and responsible conduct of research.

I have lost count of the times someone has shown conclusions based on choppy baselines and single trials, or has pointed at a lone feature in a plot and assigned a crucial interpretation to it. Or the times when someone comes up to me with a dataset, and asks ‘Is this good?’

I can never answer that question with a simple ‘yes’ or ‘no’. ‘Good’ data could justify a pre-existing belief or bias. ‘Bad’ data could be influenced by poor method development or interpretation. I treat datasets like a skydiver’s altimeter: if there are any questions about the repeatability or reproducibility of the gauge’s numbers, I can’t trust it or the conclusions I draw from it.

When someone asks, ‘Is this good?’ I ask them in return: ‘Is this data clean?’

Clean experimental data is statistically relevant, exhibits the qualitative characteristics of the technique, and is not necessarily the first thing spat out of the spectrometer. When analysing your samples or collaborating with colleagues, ensuring the integrity of your data should be paramount. The world is littered with examples of the consequences of data misinterpretation, from the resurgence of diseases to the fateful launch of the shuttle Challenger. With the skydiver in mind, your altimeter reading needs to be accurate and steady when you are ready to jump on an interpretation.
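
One way to make ‘Is it clean?’ concrete for a trace like the one my colleague pointed at is to compare the height of a feature with the scatter of the baseline around it. The sketch below is only an illustration, not the method used in the work described here: the trace values, the window indices and the function names are hypothetical, and the thresholds of 3 and 10 are the commonly quoted conventions for detection and quantitation, which your own method validation may set differently.

```python
import statistics

def signal_to_noise(trace, peak_window, baseline_window):
    """Peak height above the mean baseline, divided by the baseline's standard deviation."""
    b_start, b_stop = baseline_window
    p_start, p_stop = peak_window
    baseline = trace[b_start:b_stop]
    baseline_mean = statistics.mean(baseline)
    noise = statistics.stdev(baseline)  # sample standard deviation of the quiet region
    peak_height = max(trace[p_start:p_stop]) - baseline_mean
    return peak_height / noise

def classify(snr, detect=3.0, quantify=10.0):
    """Label a feature using assumed thresholds (3 to detect, 10 to quantify)."""
    if snr >= quantify:
        return "quantifiable"
    if snr >= detect:
        return "detectable, not quantifiable"
    return "indistinguishable from noise"

# Hypothetical detector readings: eight baseline points, then a small inflection.
trace = [0.0, 0.2, -0.1, 0.1, -0.2, 0.0, 0.1, -0.1, 0.2, 0.3, 0.2]

snr = signal_to_noise(trace, peak_window=(8, 11), baseline_window=(0, 8))
print(round(snr, 2), classify(snr))
```

With these made-up numbers the inflection sits at roughly twice the baseline noise, so it is classed as indistinguishable from noise: nothing to integrate, and nothing to put in a proposal.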

For a chemist, taking the time to generate and reliably interpret clean data requires a methodical approach. It may feel slower and more difficult – because it is. It is especially difficult in high-stress, deadline-driven situations. I am proud to say some of my colleagues have run with the challenge.

A side effect of chasing clean data is that your experimentation becomes more careful. You watch your hotplates closely. Cooling jackets are built and overbuilt. Electrical measurements are shielded and properly hooked up. Your method development takes more time, but the deviations drop. Do it right, versus right now. Then do it right again. And again.

If an altimeter on Baumgartner’s wrist had been misbehaving, there would have been a debate over whether the jump attempt should proceed. While this is extreme, the ramifications of data collection, analysis and interpretation can steer one’s course of research. I battled with attenuated total reflectance Fourier transform infrared spectrometry for six months while working on solid oxide samples. By applying a higher standard of sample preparation I was able to greatly improve my analysis of adsorbates and residual organics. My catalysis data became more repeatable and reproducible. Those six months were worth it – but not easy.

I watched Baumgartner’s jump thinking about the team of people who were glued to their readouts watching for the indication of something going wrong over New Mexico. I also had to think about what to do with my data. My colleague was looking at me expectantly.

In the end, that proposal did not include my data. The baseline inflection that day was still a bit high – my detector was not functioning optimally with the method. But once I started chasing low baseline deviations, sensitivities improved. Trends emerged and became repeatable. And the data became cleaner. Another proposal was submitted later, backed by careful research done with integrity.

The challenge of clean data is one that carried me through my PhD’s ups and downs and continues to be a consideration in every paper I read, every process I observe and every team member I work with. The question of ‘Is it clean?’ has brought me through months of ‘failed’ experiments and allowed me to learn from incredible people.

And while the likelihood of me jumping from a hot-air balloon is relatively low, if I find myself in that scenario, I will be checking my altimeter quite a bit.
