High-throughput experimentation (HTE) has gone from a scientific curiosity to a widely used tool in modern chemistry workflows. We caught up with Professor Matthew Gaunt from the University of Cambridge to hear his thoughts on the impact that HTE has had on medicinal chemistry and the role of automation and computational models in discovering new chemical space.

‘High-throughput experimentation enables you to conduct far more reactions than you would be able to do manually,’ explains Professor Gaunt. ‘You can ask significantly more questions and generate much more data from a single HTE experiment. You can interrogate individual reactions in a much more holistic sense across the whole remit of the reaction development process.’

The applications are diverse, ranging from reaction discovery and optimisation to creating compound libraries and conducting direct-to-biology assays. This versatility has made HTE an essential part of the Gaunt lab’s research at the University of Cambridge, UK, with these efficient parallel methods taking their place alongside traditional one-at-a-time approaches.

‘It has enabled us to make huge leaps forward in terms of discovery and optimisation,’ Gaunt explains. ‘For example, by running a single high-throughput experiment, we can better assess the reaction scope for an experiment. It’s incredibly useful as it informs us on what works well and what doesn’t, and it allows us to feed that information back into our optimisation process.’

Strategic use of automation

‘The way we use automation is an isolated robot to automate a particular task, but it’s not automating the workflow, simply automating the dosing of the reagent or sampling for analysis, for example,’ Gaunt explains. ‘I think I would probably refer to this as semi-automated.’

The process of setting up a reaction requires a non-trivial amount of work that comes with practical challenges. Solid-handling robots can weigh out materials, but they are often less reliable than liquid-handling platforms. Liquid handling, however, requires materials to be in solution, which may mean changing solvents. As a result, a degree of manual work is still needed in the lab.

The Gaunt lab therefore focuses automation efforts where they deliver the most value, by streamlining tasks that are identical across multiple reactions. ‘We find it useful to perform tasks that are the same across a large number of reactions. For example, if we have a reagent which is common to every single well in a plate, then we can dose that in a single go.’
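To make the ‘dose it in a single go’ idea concrete, here is a minimal Python sketch that groups a per-well transfer list into dosing steps, assuming a single step can cover every well that receives the same source and the same volume. The `Transfer` type, the `group_into_steps` function, the well names and the volumes are all hypothetical and are not part of any mosquito software.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """One hypothetical liquid transfer: source -> destination well."""
    source: str
    dest: str
    volume_nl: float


def group_into_steps(transfers: list[Transfer]) -> dict[tuple[str, float], list[str]]:
    """Merge transfers that share a source and volume into one dosing step.

    A reagent common to every well collapses into a single step, while
    reagents that differ per well still need one step each.
    """
    steps: dict[tuple[str, float], list[str]] = defaultdict(list)
    for t in transfers:
        steps[(t.source, t.volume_nl)].append(t.dest)
    return dict(steps)


if __name__ == "__main__":
    wells = ["A1", "A2", "A3", "A4"]
    # Hypothetical plan: a shared catalyst stock plus a different substrate per well.
    plan = [Transfer("catalyst", w, 200.0) for w in wells]
    plan += [Transfer(f"substrate_{i}", w, 100.0) for i, w in enumerate(wells, 1)]

    for (source, vol), dests in group_into_steps(plan).items():
        print(f"{source}: {vol} nL -> {', '.join(dests)}")
```

In this toy plan, four wells need eight transfers but only five dosing steps; on a full plate the shared reagent would still cost a single step.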


Precise control at the nanoliter scale

One of the enablers of HTE in Gaunt’s lab is SPT Labtech’s mosquito® liquid handler. ‘We use mosquito for dosing different reagents into microtiter reaction plates,’ notes Gaunt. ‘It’s particularly valuable for 384- and 1536-well plates, where precise handling of small volumes is crucial.’

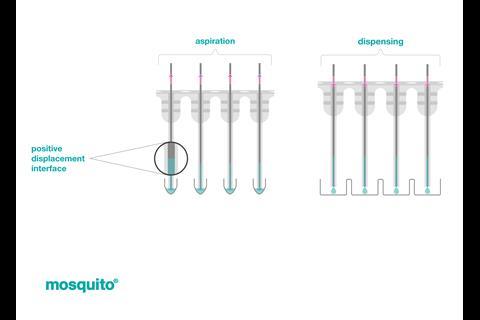

Mosquito’s ability to handle nanoliter volumes accurately comes from its true positive displacement technology. Rather than relying on an air cushion, each of mosquito’s micropipettes contains its own piston, which eliminates errors caused by changes in air pressure.
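Why an air cushion matters at the nanoliter scale can be seen from a deliberately simplified model: treat the sealed air gap in an air-displacement tip as an ideal gas at constant temperature (Boyle’s law), so a pressure change after aspiration expands or compresses the gap and shifts the liquid by that volume. A positive displacement tip has no gap, so in this model the shift is zero. The function name, the 10 µL cushion and the 1 % pressure drop are illustrative assumptions, and the model ignores temperature, vapour pressure, surface tension and hydrostatic effects.

```python
def cushion_shift_nl(cushion_ul: float, p_before_kpa: float, p_after_kpa: float) -> float:
    """Volume change (nL) of a sealed air cushion when the pressure changes.

    Isothermal ideal gas: p1 * V1 = p2 * V2, so V2 = V1 * p1 / p2.
    A positive value means the cushion expanded. With cushion_ul == 0
    (the positive displacement case) the shift is always zero.
    """
    if p_before_kpa <= 0 or p_after_kpa <= 0:
        raise ValueError("pressures must be positive")
    v1_nl = cushion_ul * 1000.0  # 1 µL = 1000 nL
    v2_nl = v1_nl * p_before_kpa / p_after_kpa
    return v2_nl - v1_nl


if __name__ == "__main__":
    p0 = 101.325        # kPa, standard atmosphere
    p1 = p0 * 0.99      # hypothetical 1 % pressure drop
    target_nl = 50.0    # hypothetical nanoliter-scale dispense

    air = cushion_shift_nl(10.0, p0, p1)     # hypothetical 10 µL air cushion
    piston = cushion_shift_nl(0.0, p0, p1)   # piston in direct contact with the liquid
    print(f"air cushion shift: {air:.1f} nL ({air / target_nl:.0%} of a {target_nl:.0f} nL dispense)")
    print(f"positive displacement shift: {piston:.1f} nL")
```

Even in this crude model, a shift of around 100 nL dwarfs a 50 nL dispense, which is the intuition behind removing the air gap altogether.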

The platform’s versatility extends beyond reaction setup. ‘We also use mosquito for analysis, which is essentially the most important part of this whole workflow,’ Gaunt continues. ‘We use mosquito to dilute samples from our reaction plates into an analysis plate.’
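The dilution step follows the familiar C1V1 = C2V2 relationship. A minimal sketch, assuming each aliquot is transferred into diluent already sitting in the analysis well; the function name and the example concentrations and volumes are hypothetical.

```python
def diluent_volume_nl(aliquot_nl: float, stock_mm: float, target_mm: float) -> float:
    """Diluent needed so an aliquot at stock_mm ends up at target_mm.

    From C1 * V1 = C2 * V2, the total volume is V2 = V1 * C1 / C2,
    and the diluent is everything that is not the aliquot.
    """
    if aliquot_nl <= 0:
        raise ValueError("aliquot volume must be positive")
    if not 0 < target_mm <= stock_mm:
        raise ValueError("target must be positive and no higher than the stock")
    total_nl = aliquot_nl * stock_mm / target_mm
    return total_nl - aliquot_nl


if __name__ == "__main__":
    # Hypothetical: 500 nL of a 100 mM reaction mixture diluted to 1 mM for analysis.
    diluent = diluent_volume_nl(500.0, 100.0, 1.0)
    print(f"pre-fill each analysis well with {diluent / 1000:.2f} µL of diluent")
```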

There are of course practical aspects of implementation. ‘You need to spend some time programming your source plates depending on the operation of your instrument. With mosquito, you dispense in rows of 16, which means that you need to plan beforehand how you’re going to set up your reactions. But that’s easy to do using various programs and some common sense.’
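The planning Gaunt describes amounts to packing reagents into groups that match what the instrument dispenses at once. A minimal sketch, assuming 16 wells are addressed together and that each group of 16 occupies one 16-well line of a 384-well source plate (a column, rows A to P); the function names and this layout convention are hypothetical and not mosquito’s own software.

```python
from string import ascii_uppercase
from typing import Optional

LINE = 16  # assumed number of wells addressed in one dispense


def source_well(index: int, line: int = LINE) -> str:
    """Map the n-th reagent (0-based) to a source well, filling one line of `line` wells at a time."""
    if index < 0 or not 1 <= line <= 26:
        raise ValueError("index must be >= 0 and line between 1 and 26")
    column, row = divmod(index, line)
    return f"{ascii_uppercase[row]}{column + 1}"


def pack_into_lines(reagents: list[str], line: int = LINE) -> list[list[Optional[str]]]:
    """Split reagents into groups of `line`; None marks an empty well in the last group."""
    groups: list[list[Optional[str]]] = []
    for start in range(0, len(reagents), line):
        group: list[Optional[str]] = list(reagents[start:start + line])
        group += [None] * (line - len(group))
        groups.append(group)
    return groups


if __name__ == "__main__":
    reagents = [f"ligand_{i}" for i in range(1, 21)]  # 20 hypothetical ligands
    groups = pack_into_lines(reagents)
    print(f"{len(groups)} lines; last line has {groups[-1].count(None)} empty wells")
    print(reagents[16], "->", source_well(16))
```

The padding makes the cost of a part-filled line visible, which is one reason to settle the layout before any liquid is moved.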

Applications in complex chemistry

The Gaunt lab’s research focuses on developing new reactions and catalytic activation modes. Rather than simply performing other people’s chemistry in high throughput, the group aim to invent and develop new things.

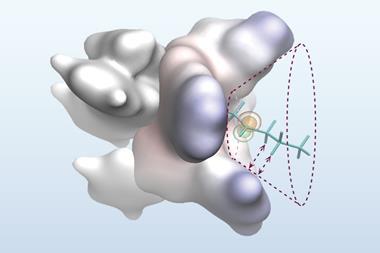

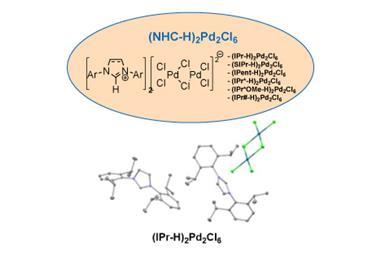

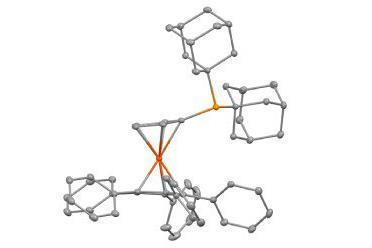

Their work spans several challenging areas, including photoredox catalysis, C-H activation and biomacromolecule chemistry (oligonucleotides, RNA, DNA, peptides).

‘Catalysis forms a key part of most of the work that we do,’ Gaunt notes. ‘The way that we use HTE is often geared towards developing new catalytic reactions where it gives us an opportunity to assess in parallel all of the reaction variables. It means that we investigate things like catalysts, ligands, bases, additives or different substrates all at once.’
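Assessing catalysts, ligands, bases and additives all at once is, in its simplest form, a full-factorial screen. A minimal sketch using `itertools.product`, assuming row-major filling of a 96-well plate (8 rows × 12 columns); the component names are placeholders and the layout is hypothetical.

```python
from itertools import product
from string import ascii_uppercase

ROWS, COLS = 8, 12  # 96-well plate


def full_factorial_layout(**factors: list[str]) -> dict[str, dict[str, str]]:
    """Assign every combination of factor levels to a well, filling A1, A2, ... row by row."""
    names = list(factors)
    combos = list(product(*factors.values()))
    if len(combos) > ROWS * COLS:
        raise ValueError(f"{len(combos)} combinations do not fit in {ROWS * COLS} wells")
    layout = {}
    for i, combo in enumerate(combos):
        row, col = divmod(i, COLS)
        layout[f"{ascii_uppercase[row]}{col + 1}"] = dict(zip(names, combo))
    return layout


if __name__ == "__main__":
    plate = full_factorial_layout(
        catalyst=["cat_1", "cat_2"],
        ligand=["lig_1", "lig_2", "lig_3"],
        base=["base_1", "base_2", "base_3", "base_4"],
        additive=["none", "add_1"],
    )
    print(len(plate), "wells used")
    print("A1:", plate["A1"])
```

Because the well count is the product of the level counts, even modest screens grow quickly, which is why the choice of factors matters as much as the plate format.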

The ability to work with minimal amounts of material has been transformative in other areas of research, most notably in developing new chemistry on nucleic acids. ‘You often only have micrograms of material, and traditionally, you can’t do very much with that in terms of exploring new chemistry,’ Gaunt explains. ‘Now we can work in a way which is much more akin to how molecular biologists might work, doing thousands of experiments with tiny amounts of material. It really does allow us to do chemistry that you wouldn’t have been able to do with a classical approach.’

The importance of quality data

Accurate and quantitative data is essential in HTE. ‘From the very beginning, we’ve pinned our loyalty to accurate, quantitative analysis,’ he states. ‘The data that most people generate using HTE is qualitative, which is useful if you simply want a yes or no answer but doesn’t give you the granular detail and accuracy that you would need for machine learning applications.’


His group uses multiple analytical techniques to deliver high-quality, quantitative data. ‘We use UPLC-MS a lot. The challenge is in creating the calibration assays that give you the quantitative readout. We also use a high-throughput NMR. That’s a much easier way to get accurate data because you can use internal standards which you can then directly integrate.’

Scale and efficiency considerations

The chosen plate format ultimately comes down to practical considerations for each lab. ‘For the data we want, it’s actually quite hard to think of more than 384 reactions to run at once. This has been a common thought around the 1536-well platform: thinking of that many valid experiments is perhaps a lot more challenging than people first thought.’

However, the flexibility of different plate formats offers unexpected advantages. ‘We find it very useful to work in 1536-well plates but only run 96 reactions at once, because it gives us the scale of reaction we need. We don’t necessarily need to do thousands of reactions, but if we just run the number of experiments we want to do in a part of the plate, that’s quite powerful.’
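Running 96 reactions in one region of a 1536-well plate (32 rows × 48 columns) is largely a bookkeeping question of which wells to address. A minimal sketch, assuming a contiguous 8 × 12 block; the function names and block placement are hypothetical, and rows beyond Z follow the AA to AF labelling used on 1536-well plates.

```python
ROWS_1536, COLS_1536 = 32, 48


def row_label(row: int) -> str:
    """0-based row index -> plate row label (A..Z, then AA..AF on a 1536-well plate)."""
    if not 0 <= row < ROWS_1536:
        raise ValueError(f"row {row} is outside a {ROWS_1536}-row plate")
    letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
    return letters[row] if row < 26 else "A" + letters[row - 26]


def block_wells(top: int, left: int, n_rows: int = 8, n_cols: int = 12) -> list[str]:
    """Wells of an n_rows x n_cols block whose top-left corner is (top, left), 0-based."""
    if top < 0 or left < 0 or top + n_rows > ROWS_1536 or left + n_cols > COLS_1536:
        raise ValueError("block does not fit on a 1536-well plate")
    return [
        f"{row_label(r)}{c + 1}"
        for r in range(top, top + n_rows)
        for c in range(left, left + n_cols)
    ]


if __name__ == "__main__":
    wells = block_wells(0, 0)
    print(len(wells), "wells:", wells[0], "to", wells[-1])
    print("bottom-right block ends at", block_wells(24, 36)[-1])
```

In this sketch the reaction scale is set by the 1536-well format, while the number of experiments stays at the 96 that are actually worth running.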

Looking to the future

As the field evolves, there are plenty of opportunities for further innovations, particularly in the integration of AI and machine learning. However, success in that area will ultimately depend on the quality and quantity of experimental data.

‘It’s really important that chemists start to appreciate the importance of data science and how to generate good data,’ Gaunt concludes.

The combination of automated liquid handling platforms like mosquito with precise analytical methods is helping to bridge this gap, enabling researchers to generate the high-quality data needed to advance both chemical discovery and machine learning applications in chemistry.

