Machine learning could offer whole new perspectives on our chemical world

Are molecules more than the sum of their parts? That depends on what you take as the parts. Given that strychnine contains the same atoms as proteins (C, H, N, O), there doesn’t seem to be much insight into molecular properties afforded by pulling such molecules into their component atoms. On the other hand, brucine – also found in the Strychnos nux-vomica tree and another nasty toxin – suggests that the alkaloid framework common to both molecules is generically bad news.

Physiological effects can be notoriously capricious – structurally similar molecules (such as two enantiomers) can have very different effects, while dissimilar molecules can produce near-identical results. But what about an intrinsic molecular property, such as total energy? Might we expect that to yield to some kind of dissection?

A preprint by Bing Huang and Anatole von Lilienfeld at the University of Basel, Switzerland, suggests that it does.1 The two researchers have shown that the energies of molecules of more or less arbitrary size and structure can be rather accurately predicted by a machine-learning algorithm that is ‘trained’ on a relatively small set of related fragments. This contrasts with previous efforts to use machine learning (ML) for calculating chemical properties, where the training sets needed become impractically large as the size and complexity of the target increases.2,3

Building blocks

This reduction in the scale of the problem is achieved by applying some logic. A typical ML approach would be to throw a vast training set at the algorithm, with some components not so closely related to the target. Instead, Huang and von Lilienfeld devised a scheme for identifying only those molecular frameworks that represent chemically meaningful fragments of the target: ‘core’ groups, ranging in size from one atom to almost the target itself, in related environments that accurately reflect the local chemistry.

There are far fewer of these fragments than the total number of possible pieces one might include in a training group, but they are adequate for enabling the algorithm to converge on an accurate calculation of the total energy. For a test case, 2-(furan-2-yl)propanol (C7H10O2), just 34 of these fragments were sufficient to produce a result within 1.5 kcal/mol of the true figure. A conventional ML approach might need tens of thousands. The new algorithm can also predict some other properties, such as polarisability.
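
The selection idea can be illustrated with a toy filter. This is only a sketch, not Huang and von Lilienfeld's scheme, whose details are not given here: it assumes each fragment is summarised by a set of local-environment labels and keeps only those fragments whose environments all occur in the target. The function name `select_fragments`, the label strings and the fragment names are all hypothetical.

```python
def select_fragments(target_envs, pool):
    """Return names of pool fragments whose environments all occur in the target.

    Fragments are ordered smallest first (by number of environment labels),
    echoing the idea of 'core' groups that grow from one atom upwards.
    """
    kept = [
        (len(envs), name)
        for name, envs in pool.items()
        if envs and envs <= target_envs  # subset test: no foreign environments
    ]
    return [name for _, name in sorted(kept)]


# Made-up environment labels for a hypothetical target molecule.
target_envs = {"C(-C,-O)", "O(-C,-C)", "C(-C,=C)", "O(-C)"}

# Made-up candidate pool; frag_c contains a nitrogen environment
# that the target lacks, so it is discarded.
pool = {
    "frag_a": {"O(-C)"},
    "frag_b": {"C(-C,-O)", "O(-C)"},
    "frag_c": {"N(-C,-C)"},
    "frag_d": {"C(-C,=C)", "O(-C,-C)", "C(-C,-O)"},
}

selected = select_fragments(target_envs, pool)
print(selected)
```

Filtering before training is what keeps the training set small in this picture: anything chemically unrelated to the target never reaches the learning algorithm.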

The fragments are the building blocks of many similar molecules. Compounds somewhat related to this test case, for example, may be broken down into similar sets that have a strong degree of overlap. While there is no obvious limit to the number of meaningful fragments in chemical space, they’re a much reduced subset of all possible pieces. And it doesn’t just work for organic molecules: Huang and von Lilienfeld show that their approach works well for non-covalent structures, such as water clusters or the hydrogen-bonded Watson–Crick base pairs of DNA, and for extended solid-state structures such as boron nitride sheets.

Structuring chemical space

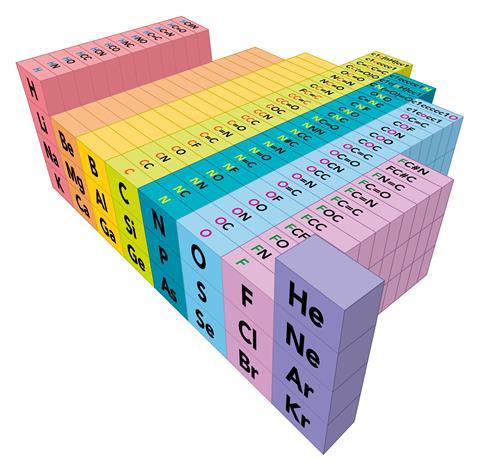

The researchers suggest that this approach can be considered an extension of the periodic table into a third dimension, which enumerates all distinct chemical environments for each element. Oxygen, say, is located in OC, ON, OO, O=N, OC=C and so on, which the researchers dub ‘am-ons’: atoms in a particular molecular environment. Von Lilienfeld says that there is a way of doing this ordering, involving integrals over neighbouring-atom distances, that provides am-ons with unique and non-arbitrary locations, even if it doesn’t avoid redundancies due to permutations of the atoms. This principle, he says, might provide a well-defined and even somewhat natural structuring of chemical space, of which the periodic table then becomes just the ‘generative surface’.
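
To make the notion of an atom in a particular molecular environment concrete, here is a minimal sketch that labels each atom of a small molecule by its element and its bonded neighbours. It is an illustration only: the label format, the function name `local_environments` and the bond-list representation are assumptions, and it does not implement the distance-integral ordering von Lilienfeld describes.

```python
def local_environments(atoms, bonds):
    """Label each atom by its element plus its sorted bonded neighbours.

    atoms: list of element symbols, indexed by position.
    bonds: list of (i, j, order) tuples, where order is 1, 2 or 3.
    """
    bond_symbol = {1: "-", 2: "=", 3: "#"}
    neighbours = {i: [] for i in range(len(atoms))}
    for i, j, order in bonds:
        # Record the bond from both ends so every atom sees its partners.
        neighbours[i].append(bond_symbol[order] + atoms[j])
        neighbours[j].append(bond_symbol[order] + atoms[i])
    return [
        atoms[i] + "(" + ",".join(sorted(neighbours[i])) + ")"
        for i in range(len(atoms))
    ]


# Formaldehyde, H2C=O: one carbon double-bonded to oxygen, two C-H bonds.
atoms = ["C", "O", "H", "H"]
bonds = [(0, 1, 2), (0, 2, 1), (0, 3, 1)]

labels = local_environments(atoms, bonds)
print(labels)

# Group the distinct environments by element: the 'third dimension'
# stacked on top of each entry in the periodic table.
by_element = {}
for symbol, label in zip(atoms, labels):
    by_element.setdefault(symbol, set()).add(label)
print(by_element)
```

Sorting the neighbour list makes each label independent of the order in which bonds are listed, a crude nod to the permutation redundancy mentioned above.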

The notion that computationally challenging problems can be solved by getting smarter is also demonstrated in another paper that combines physics-based theory with chemical intuition.4 Andrei Bernevig of Princeton University, US, and his coworkers have united the chemist’s real-space picture with the physicist’s momentum-space picture of the band structure of complex solid-state compounds. In doing so, they have found a shortcut that, in particular, identifies candidate compounds with electronic structures dominated by topological factors responsible for the unusual properties of ‘quantum materials’ like topological insulators. As with Huang and von Lilienfeld’s enumeration of chemical fragments, this approach reduces the redundancies of the search for a solution, taking advantage of the symmetries inherent in the system.

The work has been celebrated as a case of ‘chemistry and physics happily wed’.5 That’s something we might all gladly see – but perhaps more generally it’s a case of responding to complex problems not just with more resources but with canny thinking.

References

1 B Huang and OA von Lilienfeld, 2017, arxiv.org/abs/1707.04146

2 FA Faber et al, Phys. Rev. Lett., 2016, 117, 135502 (DOI: 10.1103/PhysRevLett.117.135502)

3 P Raccuglia et al, Nature, 2016, 533, 73 (DOI: 10.1038/nature17439)

4 B Bradlyn et al, Nature, 2017, 547, 298 (DOI: 10.1038/nature23268)

5 GA Fiete, Nature, 2017, 547, 287 (DOI: 10.1038/547287a)
