Huge datasets will become a big distraction if we neglect scientific methods

Gaze down upon that great endeavour that we call science and what does one see? At one scale, it appears homogenous; scientists are a united whole, conducting experiments, collecting and interpreting data. But a closer look reveals complexity: many tribes of scientists, each with its own territory, and differing customs and practices, particularly when it comes to the use of theory.

These differences are becoming increasingly important with the growth of big data approaches in research, such as Microsoft’s bid to ‘solve’ cancer with computer science and the $3 billion Chan Zuckerberg Initiative, which emphasises ‘transformational technologies’ such as machine learning.

The ways that physical and biological sciences have responded to the opportunities of big data reflect how these groups lean towards different philosophies.

The former tend to follow Karl Popper, who in the 20th century gave us Popperianism: propose a theory, then test it with data. In large parts of biology and medicine, the emphasis is more on an approach that dates back to the 16th century father of empiricism, Francis Bacon: gathering data from experimental observations, then devising post hoc explanations.

However, with Ed Dougherty of Texas A&M, we point out in the Philosophical Transactions of the Royal Society A that, with the rise of big data, biological and medical sciences are increasingly becoming pre-Baconian, because even four centuries ago, Bacon also valued concepts. Yet today’s advocates of big data in biology hope that acquiring large enough datasets will provide answers without the need to construct an underlying theory.

In the complex world of biosciences, that is a seductive promise: an easy way to crank out inferences of future behaviour based on past observations. Who needs theory if science can be boiled down to blind data gathering and look-up tables of data and outcomes?

Leaving aside issues that are critical for successful experiments – whether the right data of sufficient quality have been gathered – the proliferation of genomes, proteomes and transcriptomes can make it harder to find a significant signal in a thicket of false correlations. That is one reason why the impact of the human genome programme has been relatively disappointing.

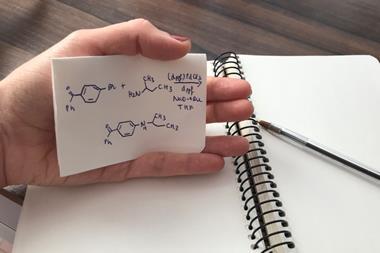

Chemistry can provide a unique perspective on this problem. Like its peers in biology, the Materials Genome Initiative is also seeking answers by amassing more data. Once again, many correlations in all these data are likely to be false leads. To tackle this problem, a team at Los Alamos in New Mexico blends elements of big data and bold theory, making use of so-called Bayesian methods to infer unknown mechanistic parameters from a wealth of experimental data using supercomputers.1

There are other productive cocktails of Bacon and Popper in chemistry. Working with James Suter and Derek Groen at University College London, one of us (PVC) has demonstrated it is possible to calculate the properties of nanocomposite materials from the bottom up, using supercomputers to extrapolate from the quantum domain to slabs measuring microns across. This virtual laboratory has many applications when it comes to developing high performance nanocomposites for cars and aircraft, for example.2

Chemists can also show the way forward when it comes to the quest for personalised medicine. At the turn of this century many researchers hoped that one could prescribe a drug based on knowledge of a patient’s genome. But while the Baconian, statistical, view is fine for a population of thousands of patients, it breaks down at the level of an individual.

Today personalised medicine has been downgraded to precision medicine: study how genetically similar people react, then assume that another person in this population will respond similarly. Because everyone is different, the only way to deliver the original vision of using genetic information to predict how an individual will respond to a drug is by Popperian modelling, which is now becoming possible to do reliably, for instance when using the sequence of the HIV virus to guide treatment.3

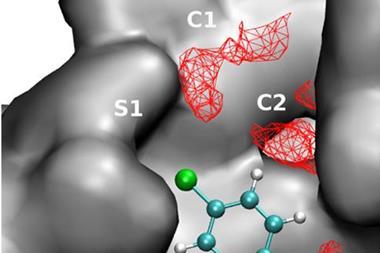

Earlier this year the UCL team monopolised the 6.8 Petaflop SuperMUC – run by the Leibniz Supercomputing Centre near Munich - for 36 hours to study how 100 drugs bind with protein targets in the body. This work suggests it is possible to design personalised treatments in the time between diagnosis and selecting an off-the-shelf medicine.

Today a new EU CompBioMed Centre of Excellence will be launched to pursue this approach which, if successful, will mark an important advance for truly personalised medicine. Yes, science will thrive on big data. But we need understanding too: biology needs more big theory.

Peter Coveney is professor of chemistry at University College London and Roger Highfield is Director of external affairs at the science museum group

References

1 Information science for materials discovery and design, eds T Lookman, F J Alexander and K Rajan, 2015, Springer; D Xue, P V Balachandran, J Hogden, J Theiler, D Xue, T Lookman, Nat. Commun., 2016, 7, 1124. (DOI:10.1038/ncomms11241)

2 J L Suter, D Groen and P V Coveney, 2015, Adv. Mater., 27, 966. (DOI:10.1002/adma.201403361); J L Suter, D Groen, P V Coveney, 2015, Nano Lett., 15, 8108 (DOI:10.1021/acs.nanolett.5b03547)

3 P V Coveney, S Wan, Phys. Chem. Chem. Phys., 2016 DOI:10.1039/c6cp02349e; D Wright, B Hall, O Kenway, S Jha and P V Coveney, J. Chem Theory Comput., 2014, 10, 1228 (DOI: 10.1021/ct4007037)

No comments yet