State-of-the-art design for computer language processing results in improved models for predicting chemistry

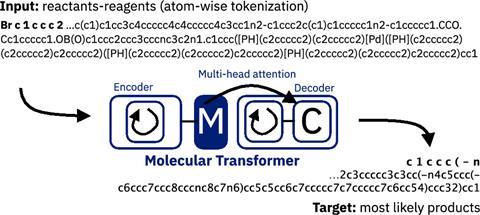

A program for predicting reaction outcomes and retrosynthetic steps has been developed using a cutting-edge approach for translating languages. Named Molecular Transformer, the software uses a new type of neural network that is easier to train and more accurate than the ones that powered earlier translation-based approaches to chemistry.

‘Thinking about chemistry in terms of language has been around for some time,’ says Alpha Lee from the University of Cambridge, UK, who led the research. Molecules can be represented unambiguously in text using Iupac terminology or Smiles strings, for example. This has led to computer models that treat the conversion of reactants to products as a translation between their Smiles representations, building on advances in computer natural language processing. ‘The fact that there is an underlying language in chemistry makes these methodologies very appealing and effective,’ says Teodoro Laino, a researcher on the project at IBM’s Zurich Research Laboratory in Switzerland. ‘Molecular Transformer basically just learns the correlation between the reactant, reagent and the product, just like machine translation, and that very flexible view of chemistry allows the machine to actually learn a lot more from the data,’ explains Lee.
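To make the translation framing concrete, the sketch below splits Smiles strings into tokens, the 'words' that a sequence-to-sequence model would read and write. It is a minimal illustration only: the regular expression is a simplified assumption rather than the researchers' own tokeniser, and the esterification example is chosen here for illustration and is not taken from the study.

```python
import re

# Simplified token pattern (an assumption for illustration, not the authors'
# exact tokeniser): bracket atoms, two-letter halogens, two-digit ring
# closures, then single characters for atoms, digits, bonds and separators.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|%\d{2}|[A-Za-z]|\d|[=#\-+\\/()\.:~@>*$]")


def tokenize(smiles):
    """Split a Smiles string into a list of tokens."""
    tokens = SMILES_TOKEN.findall(smiles)
    # Guard against characters the pattern does not cover being dropped.
    if "".join(tokens) != smiles:
        raise ValueError(f"Unrecognised characters in {smiles!r}")
    return tokens


# Illustrative reaction: acetic acid + ethanol -> ethyl acetate.
# The 'source sentence' is the reactants, the 'target sentence' the product.
source = tokenize("CC(=O)O.OCC")
target = tokenize("CC(=O)OCC")
print(" ".join(source), "->", " ".join(target))
```

In this picture, predicting a reaction outcome means generating the target token sequence from the source one, in the same way a translation model generates a sentence in another language.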

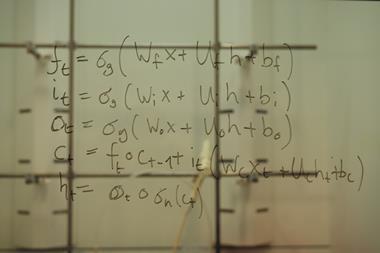

Molecular Transformer is built around a neural network with a transformer architecture, a new design published by Google researchers in 2017 that immediately shot to prominence in machine translation. The internal structure of previous neural networks meant that well-separated parts of the input had a relatively weak influence on each other’s meaning, which doesn’t reflect the properties of human language or of molecules. ‘When you compress a molecule which lives in 3D into a 1D string, then atoms that are far apart on this string could actually be close together in 3D,’ says Lee. ‘That immediately motivated us to think about mechanisms or architectures in machine learning that allow us to capture these very long range interactions and correlations.’ Neural networks based on the transformer architecture make heavy use of a mechanism called attention, which lets them learn which parts of the input are relevant to each part of the output regardless of their positions. This reduces the amount of training needed and improves the resulting language models’ accuracy.
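The core of the attention mechanism can be sketched in a few lines. The example below implements scaled dot-product attention, the basic operation in transformer networks, in plain Python. It is a toy under stated assumptions: the query, key and value vectors are made up for illustration, and the learned projections, multiple heads and positional encodings of a real transformer, including Molecular Transformer, are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q.k / sqrt(d)) applied to values."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, wherever the key sits.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the weighted average of the value vectors.
        outputs.append(
            [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        )
    return outputs, weights


# Hypothetical five-token input: the first and last tokens have matching keys.
keys = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
values = [[10.0], [20.0], [30.0], [40.0], [50.0]]
query = [4.0, 0.0]  # the query for the first token

outputs, weights = attention([query], keys, values)
w = weights[0]
print([round(x, 3) for x in w])
```

The weights depend only on how well a query matches each key, not on how far apart the tokens are, so the first token attends to the last one as strongly as to itself. That is the property that lets atoms far apart in a Smiles string influence one another directly.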

The study’s results show that these benefits also apply to chemical reactions. In the researchers’ tests with data published in US patents, Molecular Transformer outperformed other language-based approaches, predicting the correct reaction outcome over 90% of the time [1]. The model was also trained to predict retrosynthesis steps, and correctly found the published disconnection over 43% of the time [2]. As a demonstration of the model’s flexibility, a version was trained with data from lab books from collaborators at Pfizer. The program correctly predicted reaction outcomes 97% of the time, and the expected retrosynthesis step 91% of the time, albeit on a more focused set of reactions. ‘Attention has been shown to produce great improvements in language models,’ notes machine learning and organic chemistry researcher Gabriel dos Passos Gomes of the University of Toronto, Canada. As a result, ‘their performance does not come as a surprise’, but he notes the researchers’ claim that the model seems to have learned to predict stereochemistry. ‘If true, it is a very nice achievement.’

A robot companion for chemistry

Laino urges caution in interpreting benchmark numbers, particularly those around retrosynthesis and commercial data. (Laino was not involved in those aspects of the research.) More rigid prediction models may perform better overall, and recently-developed transformer models from other groups were not included in the researchers’ comparisons. It’s also hard to judge a success rate for retrosynthesis predictions. ‘Sometimes the model predicts the wrong anion in reagents, but everything is right, and, moreover, from a chemist’s point of view, the overall reaction is ok,’ notes Pavel Karpov, who is also investigating transformer models for retrosynthesis at the Helmholtz Zentrum Munich, Germany. Laino says that the real proof of these models will be their incorporation in overall strategies and effectively linking retrosynthetic steps together, which Molecular Transformer users must currently do manually. ‘In order for a technology to be disruptive, it must be easily usable and easily accessible by the end-users, ie organic chemists.’

The researchers who spoke to Chemistry World say that tools like this will augment, rather than replace, human scientists. ‘It is too early for me to tell if this is going to have a major impact on the industrial scale, but I can certainly see this – and IBM RXN – being used routinely at the lab,’ says Gomes, adding that ‘as an undergraduate student, I would have loved to have a robot companion that could check if my reactions were correct or at least made any sense’. ‘Even a well-educated and experienced chemist cannot know every reaction that has been carried out in the world,’ Karpov points out. ‘The machine could automatically analyse it, save it, and prepare it for future use. So when a new request for a synthesis with similar functional groups and their arrangements comes, the model can remember that case and will trace back the particular experiment.’

Lee sees Molecular Transformer as a kind of ‘GPS for chemists’ that can quickly test ideas. ‘It can tell you whether a reaction is a go or a no go, and it can tell you given a particular target molecule you want to access, what is the best way to get there,’ he says. ‘A lot of promising molecules that have never been made before can be suggested by generative models and yet making those molecules can still be a challenge; Molecular Transformer aims to close this big gap in the literature.’

Lee hopes to extend Molecular Transformer to consider reaction conditions, something language-based approaches are uniquely suited to. ‘It’s a very versatile framework, because it’s a sequence to sequence model and a lot of chemical information can be condensed into this format,’ he says. ‘Which means we can retrain Molecular Transformer to do these different tasks almost without much hassle.’ Molecular Transformer has been made available as open-source software; its model for forward reaction prediction currently powers the free web application IBM RXN for Chemistry.

References

1. P Schwaller et al, ACS Cent. Sci., 2019, 5, 1572 (DOI: 10.1021/acscentsci.9b00576)

2. A A Lee et al, Chem. Commun., 2019, DOI: 10.1039/c9cc05122h
