An efficient new computer brain can provide quick answers to computational chemistry problems

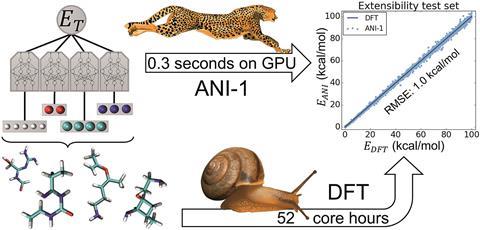

A computer that has been taught about organic chemistry can describe the forces in molecules as accurately as density functional theory (DFT), but hundreds of thousands of times faster. This combination of speed and accuracy could allow researchers to tackle problems that were previously out of reach.

Chemists hoping to use computer simulations face a dilemma. Researchers commonly need to know the energy of a molecule and the forces that control how it twists and bends. Accurate quantum mechanical methods such as DFT demand a lot of computing power and time. Approximations such as semi-empirical methods give faster but less reliable results. Although there is a spectrum of options, most techniques force researchers to trade speed against accuracy.

Graduate student Justin Smith at the University of Florida, US, has taken a different tack with the ‘Accurate NeurAl networK engINe for Molecular Energies’, or Anakin-me (Ani for short). Ani isn’t programmed to know chemistry or physics. Instead, it’s shown a set of chemical structures and the results of DFT calculations, and devises connections from one to the other. ‘It doesn’t learn chemistry in the sense that we usually think about it, it learns that there are some underlying patterns in the data that allow you to do this,’ notes Adrian Roitberg, Smith’s supervisor.

The upside is that Ani is much quicker. ‘Practically, coding up quantum mechanics software takes a much shorter time than it takes to run the calculations,’ explains Garrett Goh, a researcher in machine learning at the Pacific Northwest National Laboratory, US, who was not involved in the project. ‘In contrast, the bottleneck in neural networks is training the model. Once that is done, compute is fast,’ says Goh. This speed boost might allow chemists to screen huge numbers of compounds, or study bigger molecules. Goh notes that, unlike other neural networks, Ani can apply its chemical know-how to molecules larger than those it was trained on.

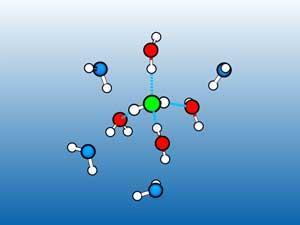

Ani is still in training, so it only knows about carbon, hydrogen, oxygen and nitrogen. Its inventors are now teaching it about other elements, and Ani’s experience with organic chemistry makes that process easier. ‘To have this network learn about new things is much cheaper than the first time around,’ says Roitberg. In the future, Anakin-me might even train itself, identifying the kinds of molecules it’s not good at and finding examples in online databases.

The team are optimistic about the future for neural network methods, with applications that range from drug discovery to inventing new materials. ‘They’re going to absolutely disrupt the way we practice computational chemistry,’ predicts Olexandr Isayev of the University of North Carolina, US, who co-directed the project with Roitberg. ‘I think it’s going to be an exciting time.’

References

J S Smith, O Isayev and A E Roitberg, Chem. Sci., 2017, DOI: 10.1039/c6sc05720a (open access)
