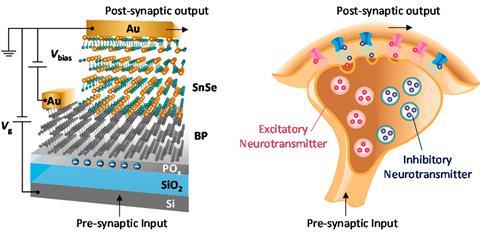

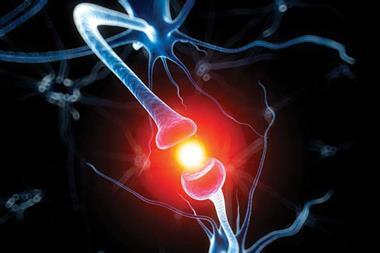

Researchers in the US have built an artificial synapse that, like its biological counterpart, can switch between two types of signal. Artificial synapses could revolutionise neural network computing by mimicking the way the brain works.

Some synapses, which are the links between neighbouring neurons in the brain, can release two types of neurotransmitter: excitatory and inhibitory. An excitatory signal makes a neuron more likely to fire, whereas an inhibitory signal suppresses a neuron’s activity. ‘You can think of it as an accelerator and brake in a car. Having both is fundamental to a robust and stable neural network,’ explains Han Wang from the University of Southern California, who led the study together with Jing Guo from the University of Florida.

Most artificial synapses produce only one type of signal. The few devices that can switch between an excitatory and an inhibitory response require an additional electrode, which makes them bulky and difficult to scale up, explains Wang. The device built by Guo, Wang and their team needs no such modulating electrode. Instead, it can be configured to produce either an excitatory or an inhibitory output simply by switching the input voltage. According to Wang, this means it mimics a biological synapse more closely, whereas the electrode-modulated devices resemble heterosynapses, a different arrangement in which three neurons work together.

Key to the artificial synapse’s function is a layered semiconductor structure: tin selenide on top of black phosphorus. ‘Their properties allow us to tune its electrical characteristics to reconfigure the synapse,’ explains Wang.

Devices like these could one day replace transistors in artificial neural networks. ‘The problem [with existing neural networks] is that they need huge amounts of data and a lot of computational power, which is translated into energy consumption,’ says Giacomo Indiveri from the Institute of Neuroinformatics in Zürich, Switzerland. ‘Dedicated hardware implementations for neural networks are promising because they could reduce power consumption and could speed up the learning process.’

However, Themis Prodromakis, a professor of nanoelectronics at the University of Southampton, is worried about the new device’s high power consumption: it needs about 20 V, whereas other technologies use less than 1 V. ‘For this work to become more competitive, it will need to show further improvements in power savings when compared to other competing technologies. This is particularly important when one considers large scale implementation of neural networks employing ensembles of such devices,’ Prodromakis says. Indiveri agrees: ‘The voltage signals that are used in this device go up to tens of volts, which would make it hard to use it together with standard technology.’

References

H Tian et al, ACS Nano, 2017, DOI: 10.1021/acsnano.7b03033