A provable positive effect doesn’t necessarily translate into a viable drug

US pharmaceutical company Merck & Co recently released some clinical trial results that surprised many people, including me. Merck has been developing anacetrapib – an inhibitor of cholesteryl ester transfer protein (CETP) – for many years now, as a potential treatment for cardiovascular disease.

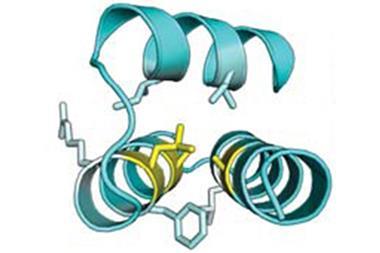

Without going into too many details, which in this area are never in short supply, CETP shuttles cholesteryl esters and triglycerides between different lipoprotein fractions, and it’s been considered a drug target since at least the early 1990s. A CETP inhibitor should not only lower the so-called ‘bad’ cholesterol (low-density lipoprotein, LDL) but significantly raise the ‘good’ cholesterol (high-density lipoprotein, HDL) at the same time. By the usual way of thinking about heart disease, this should be an excellent combination of effects, leading to less atherosclerosis, fewer heart attacks, and so on.

But things aren’t that simple (which is a phrase that would make an uninspiring but completely truthful motto for a drug company). Several organisations have tried to develop CETP inhibitors, and although they have all had the desired effects on lipoproteins, every single one of them has come to grief in clinical trials, usually at huge expense. The compounds have either done nothing for cardiovascular outcomes or even caused slight but provable harm – certainly not what anyone was expecting. Merck is one of the last companies still hoping for a useful drug in the area, well after many competitors have staggered away wishing that they’d never heard of CETP in the first place.

The surprise is that anacetrapib actually met its primary endpoint, producing a statistically significant reduction in major coronary events. That’s a first, although if 20 years ago you’d told researchers in the field that it would take until 2017 to demonstrate this they would have been horrified. What is still unknown, for now, is whether this compound will ever be a marketed drug. Merck is still considering whether or not to file for regulatory approval, which made statistically alert observers immediately think of the phrase ‘effect size’.

Anyone trying to understand clinical trial results should have the phrase ‘effect size’ ready at all times. In its most common form, effect size is the difference between the means of the two study groups (treatment and control) divided by the standard deviation. In practice, that makes it a measure of how strong the effect really is; it’s a standardised and corrected measure of the difference between the groups. Merck’s anacetrapib trial had 30,000 patients: a massive and expensive effort that should allow them to see statistical significance, even for quite small effects. This is a big part of what people mean when they talk about whether a trial is sufficiently ‘powered’ to pick up a given effect: a trial with just a few participants will only be able to reach significance for things with large effect sizes, whereas a very large one can pick up small ones. The question is, did Merck see a statistically real outcome that is nonetheless too small to be useful? We won’t know until this autumn, at the earliest.
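For readers who like to see the arithmetic, here is a minimal sketch of how effect size and sample size trade off. It is an illustration only, not Merck’s analysis: the function names, the effect size of 0.05, the equal split of 30,000 patients into two arms of 15,000, and the use of a simple two-sided z-test are all my assumptions. (A real outcomes trial compares event rates rather than means, so the actual statistics are more involved.)

```python
from math import sqrt
from statistics import NormalDist


def cohens_d(mean_treatment, mean_control, pooled_sd):
    """Standardised effect size: difference in group means over the SD."""
    return (mean_treatment - mean_control) / pooled_sd


def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    Assumes equal group sizes and a normal approximation; `d` is the
    true standardised effect size (hypothetical input, not trial data).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)       # significance threshold
    shift = abs(d) * sqrt(n_per_group / 2)  # expected test statistic
    # Probability that the test statistic lands beyond either threshold.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


if __name__ == "__main__":
    small_effect = 0.05  # an arbitrary, deliberately tiny effect size
    for n in (50, 500, 15_000):
        power = approx_power(small_effect, n)
        print(f"n per group = {n:>6}: power = {power:.3f}")
```

Under these assumptions, a trial with 50 patients per arm has almost no chance of detecting so small an effect, while one with 15,000 per arm will detect it nearly every time. That is exactly why a significant result from a very large trial says little, by itself, about whether the benefit is big enough to matter.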

This story illustrates several important features of drug research, many of them not all that comforting. First, what seem to be solid hypotheses are still very much open to being disproven, because in so many cases we don’t understand the biology of human disease well enough. In this example, there are clearly things about human lipidology that we haven’t grasped! Second, many therapeutic areas need great reserves of fortitude, patience, and (not least) cash in order for a drug to succeed. I would not like to estimate how much money has gone into CETP drug research across the industry, but it’s substantial. And finally, it’s possible to succeed and still fail, ending up with a treatment that does not make enough of a difference to make it worthwhile developing. Annoyingly, you often have to spend most of the money involved to find that out. There is no substitute for real data from real patients, and they only get involved in the last and most expensive stages of the process. Knowledge rarely comes easily in any area, and especially not in this business.
