AI and machine learning are useful and powerful, but they need high quality data inputs that aren’t available yet for drug discovery

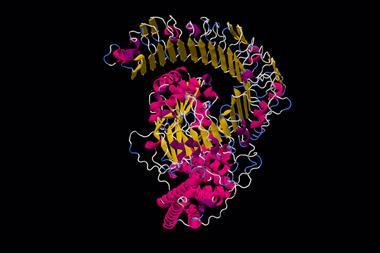

We’ve had a good amount of recent news about artificial intelligence (AI) and machine learning (ML) in chemistry (and biology), and the pace is not slowing down. Late in 2020, DeepMind’s AlphaFold team made headlines with a huge improvement in the prediction of protein structures, followed quickly by the RosettaFold team from the University of Washington, US. Now both groups have announced similarly impressive progress in predicting protein–protein interactions and the structures of the resulting complexes, problems seen by many as the logical next (and harder) steps in the field.

If you were to use the time machine of your choice to communicate all this to researchers back in the 1970s, they would probably assume that here in the early 2020s we’ve learned an awful lot about the energetics of protein folding, hydrogen bonds, water–molecule interactions, and about balancing entropic and enthalpic energy contributions from first principles. Now, we do know more about those things than we did forty or fifty years ago, to be sure, but here’s the strange part: we still don’t know enough about them to use them as the basis for the kinds of eerily accurate protein structure predictions we have now.

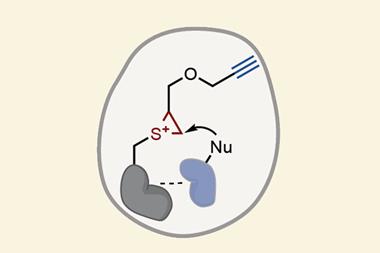

Where do these predictions come from, then? What we’re seeing is more of a triumph of pattern matching and database wrangling. By now we have amassed a huge trove of experimental data on protein structure, via x-ray diffraction, NMR and (more recently) cryo-electron microscopy. This gives us the chance (aided by some ingenious and well-honed algorithms) to pick out a variety of structural motifs and their associated amino acid sequences, which lets large parts of protein structural space be filled in by analogy to structures we’ve already determined.
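To make “filling in by analogy” concrete, here is a deliberately crude Python caricature of it: look up the most similar known sequence and borrow its structural label. It is not how AlphaFold or RosettaFold work (they are far more sophisticated), and the sequences, labels and the `predict_by_analogy` helper are all hypothetical, invented purely for illustration. The toy also assumes equal-length, pre-aligned sequences with no gaps.

```python
def percent_identity(a, b):
    """Fraction of positions with identical residues (toy: equal-length, ungapped)."""
    if len(a) != len(b):
        raise ValueError("toy sketch assumes equal-length, pre-aligned sequences")
    return sum(x == y for x, y in zip(a, b)) / len(a)

# Hypothetical mini-"database" mapping known sequences to coarse structural labels.
KNOWN = {
    "MKTAYIAKQR": "helical bundle",
    "VKVTVEGSGT": "beta sandwich",
    "GSSGSSGSSG": "disordered",
}

def predict_by_analogy(query):
    """Return (label, identity) borrowed from the most similar known sequence."""
    best = max(KNOWN, key=lambda seq: percent_identity(query, seq))
    return KNOWN[best], percent_identity(query, best)

label, identity = predict_by_analogy("MKTAYLAKQR")
print(label, identity)  # helical bundle 0.9
```

If the query resembles nothing in the database, the lookup still returns an answer, just with a low identity score. That is the data-coverage problem in miniature: the method is only as good as what has already been measured.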

A crucial ingredient for all this is that massive pile of high-quality data. The techniques used to sort through it are terrific. But without enough ground truths about protein structure, no algorithms would be able to get enough traction on the problem. That illustrates an important fact about information, one that might seem trivial, but which is becoming more interesting all the time: you cannot get more out of data than was present to start with. This can be stated more formally with reference to things like Shannon entropy and algorithmic compressibility, but in general there’s a conservation law at work similar to those for energy and matter.
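A toy Python sketch of that conservation idea, under simplifying assumptions: it uses the empirical (plug-in) Shannon entropy of a symbol sequence, and treats zlib-compressed length as a rough practical stand-in for algorithmic compressibility. The data and the coarse-graining step are invented for illustration. A deterministic processing step can keep or lose information, but it cannot create more than was in its input.

```python
import math
import os
import zlib
from collections import Counter

def shannon_entropy(samples):
    """Empirical Shannon entropy, in bits per symbol, of a sequence of symbols."""
    counts = Counter(samples)
    n = len(samples)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def compressed_size(data):
    """Bytes needed after zlib compression at level 9 (a crude complexity proxy)."""
    return len(zlib.compress(data, 9))

# Toy data set: 8 equally frequent symbols, so 3 bits per symbol.
raw = [i % 8 for i in range(800)]

# A hypothetical deterministic processing step that merges symbols pairwise.
# Whatever the step, the output entropy can never exceed the input entropy.
processed = [x // 2 for x in raw]

print(shannon_entropy(raw))        # 3.0
print(shannon_entropy(processed))  # 2.0

# Highly repetitive data carries little information and compresses well;
# random bytes carry close to the maximum and barely compress at all.
repetitive = b"ACGT" * 1000
noise = os.urandom(4000)
print(compressed_size(repetitive), compressed_size(noise))
```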

The set of protein data is large, rich, and detailed enough that one can extract from it useful predictions about the structures of proteins that have never even been thought of before. So if you want to see where the next amazing AI results might come from, look for other data sets with enough gold in them to be profitably mined. Machine learning techniques do not create that gold; they uncover it and work out how the richest seams of it are connected. Putting together such databases is, as they say, non-trivial. You need numbers that you’re sure of (naturally), covering a large amount of space relative to your problem, and formatted in such a way as to give the software the quickest and most useful approaches to finding all those hidden connections. Without clean, well-structured data, you and your algorithms are going to have a very unpleasant time of it. The classic ‘garbage in, garbage out’ law of computing is never more applicable than it is in machine learning.

For proteins, you might think that a powerful next step would be predicting new drug targets and disease pathways. But this is going to be a much harder job than structure prediction (which has certainly been hard enough until now). There simply isn’t a well-curated dataset of the sort of knowledge needed for that job, and the knowledge we do have is full of gaps. To make things more complicated, some of those gaps are obvious, but some are, as yet, invisible. They’ll only become clear as we learn even more about cell biology and living organisms as a whole. These are the things for which researchers 50 years from now will look back on us with pity. ‘Those poor people!’ they’ll say. ‘They didn’t even realise X or know about Y, and no one had even thought of Z! No wonder they had such a hard time!’

And do you know who will find those things out? Not our AI and ML systems, although I’m sure they’ll help whenever possible. No, it’s going to be us. Just like it always has been.

No comments yet