Despite criticism, some universities still use the metric as a proxy for research quality

When it comes to judging scientists’ success, research-intensive universities seem to cling to journal impact factors. An analysis of assessment guidelines from 129 North American universities has found that almost a quarter mention the impact factor, and that most of those treat it as a measure of research quality and significance. This is despite widespread criticism that the metric is unsuitable for evaluating individual researchers’ work.

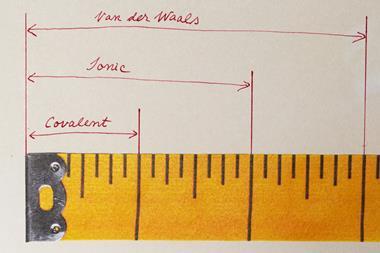

The impact factor, which measures the average number of citations received in a given year by articles a journal published in the previous two years, was designed in the 1960s to help libraries decide which journals to purchase. In the late 1990s, however, the metric started to be used as a proxy for the quality and importance of individual studies, a potential misuse that has since attracted considerable criticism.

The most prominent project opposing the use of journal metrics as a way to measure research quality and impact is the San Francisco Declaration on Research Assessment. To date, it has been signed by more than 14,000 individuals and 1300 organisations, including all seven UK research councils.

Now, a preprint study has for the first time analysed how US and Canadian universities use the impact factor in their review, promotion and tenure guidelines.

Across 865 documents from 129 universities, only 23% of institutions were found to explicitly mention the impact factor. However, among the institutions that do refer to it, almost 90% use it to measure the quality and importance of individuals’ research. Only a few universities caution against or discourage the metric’s use, the analysis concludes.

Impact factors feature most often at research-focused universities, 40% of which refer to them, and less often at institutions that predominantly grant master’s or undergraduate degrees. Around 30% of life science and physical science departments back impact factors, compared with only 20% of social science and humanities or multidisciplinary units.

Moreover, many assessment guidelines were found to refer to ‘top tier’ or ‘high-ranking’ journals without specifically mentioning impact factors. The study’s authors now hope to find out whether review committee members translate these terms into specific metrics when assessing faculty members.

References

E C McKiernan et al, PeerJ Preprints, 2019, 7, e27638v2 (DOI: 10.7287/peerj.preprints.27638v2)
