The algorithm may have learnt about energy landscapes, but it needs a little help to find the global minimum

The success of AlphaFold for predicting the structures of more than 200 million proteins, announced last August, led to excited claims that the algorithm will revolutionise biology, drug discovery and molecular medicine. That remains to be seen, but some were keen to temper the hype by pointing out that AlphaFold had not in fact ‘solved the protein-folding problem’. Rather, it had sidestepped the question by using machine learning to find associations between sequence and known structures that it then generalised to unknown structures.

Unlike, say, a molecular dynamics simulation, AlphaFold – devised by a team at DeepMind, an offshoot of Google – doesn’t attempt to recapitulate the molecular pathway leading to the folded structure. It just uses the correlations it has learnt between sequence and chain shape. To identify these for an arbitrary primary amino-acid sequence, however, the algorithm needs to compile collections of sequences closely related to the target, called multiple sequence alignments (MSAs). These give the algorithm a sense of the consequences of amino-acid substitutions in this part of configuration space. The requirement for MSAs is a problem if few known proteins are close homologues of the target.

How good these predictions are is still debated. A new preprint reports that even for structures that AlphaFold predicts with high confidence, there can be significant discrepancies in detail with the experimental data.1 The predictions, the researchers say, should be regarded not as alternatives to experimental structure determination but, rather, as hypotheses that experiments can test.

Still, the predictions are mostly impressively accurate and surely useful for explorations of protein structure and function. Do they, though, tell us anything about protein folding itself?

Ground control

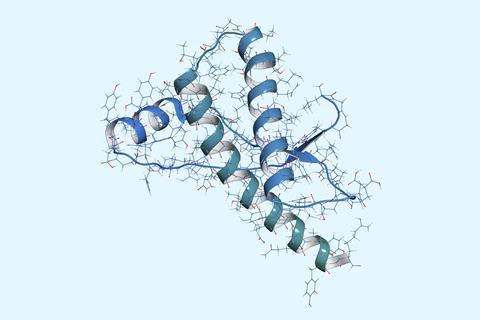

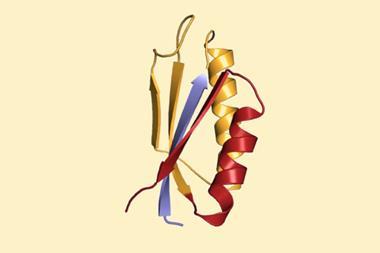

The idea that the lowest-energy folded state of a protein is uniquely encoded in its primary amino-acid sequence was put forward in 1973 by Christian Anfinsen.2 How else could a protein fold correctly and reliably once it has been translated on the ribosome, or refold after being denatured? In the canonical picture today, folding happens on a potential-energy landscape with a funnel-like topography, which channels the process towards the ground state more or less regardless of the initial configuration and keeps it from getting stuck in sub-optimal metastable conformations.

Does AlphaFold tell us anything about this energy landscape? That isn’t obvious – but James Roney and Sergey Ovchinnikov of Harvard University have now argued that it does.3 They propose that the algorithm has implicitly learnt what the energy landscape looks like but that, because it is so vast, it can’t navigate its way to the global energy minimum – the most stable fold – from any arbitrary starting point. That is why the MSAs are needed: to start the optimisation process in the right vicinity. (‘Energy’ here doesn’t necessarily correspond to the thermodynamic free energy, but rather, to some function that AlphaFold optimises.)
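Why a good starting point matters can be seen in a one-dimensional caricature. This is an illustrative assumption, not something taken from Roney and Ovchinnikov's paper: the landscape is a broad quadratic bowl (the funnel) overlaid with cosine ripples that create metastable minima, and plain gradient descent merely stands in for whatever search AlphaFold effectively performs. The names energy, gradient and descend are hypothetical.

```python
import math


def energy(x: float) -> float:
    """Toy landscape (an assumption for illustration): a broad quadratic
    'funnel' plus cosine ripples that create local minima near each integer x.
    The global minimum is at x = 0, where energy = -1."""
    return x * x / 10.0 - math.cos(2.0 * math.pi * x)


def gradient(x: float) -> float:
    """Analytical derivative of energy(x)."""
    return x / 5.0 + 2.0 * math.pi * math.sin(2.0 * math.pi * x)


def descend(x0: float, lr: float = 0.01, steps: int = 2000) -> float:
    """Plain gradient descent from x0. It only ever moves downhill,
    so it settles in whichever basin it starts in."""
    x = x0
    for _ in range(steps):
        x -= lr * gradient(x)
    return x


if __name__ == "__main__":
    # A 'far' start and a start already in the right vicinity.
    for start in (3.2, 0.3):
        end = descend(start)
        print(f"start={start:+.1f}  end={end:+.3f}  energy={energy(end):+.3f}")
```

Started at x = 3.2, the descent stalls in the metastable basin near x ≈ 3, well above the global minimum. Started at x = 0.3, it slides down to x = 0. In this caricature the landscape is the same in both runs; only the starting point differs, which is the role the argument above assigns to MSAs.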

In support of this picture, Roney and Ovchinnikov show that AlphaFold can deduce how well a sequence fits candidate structures offered to it ‘by hand’. The researchers use a database of protein structures generated with a classical structure-prediction software package called Rosetta.4 Unlike AlphaFold, Rosetta has an explicit energy function, based on interatomic forces, solvation, hydrogen bonding and so forth, and performs quite well for small proteins. When they fed AlphaFold a structure optimised by Rosetta along with many ‘decoy’ structures covering a wide range of structure space, the confidence the algorithm assigned to each structure correlated with its genuine quality, judged by how well it minimises the energy function.
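The test described above asks whether the ranking that AlphaFold's confidence imposes on a set of structures matches their ranking by quality. One standard way to quantify that is Spearman's rank correlation. The sketch below is a self-contained version that assumes each structure has already been reduced to a single confidence number (for instance an average of AlphaFold's pLDDT scores) and a single quality number. The function names and the numbers in the usage example are hypothetical placeholders, not the authors' data or their exact procedure.

```python
import math
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Return 1-based ranks of `values`; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values starting at sorted position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(vx * vy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Made-up illustrative numbers, NOT data from Roney and Ovchinnikov:
    # a quality score per decoy (higher = better structure) and a
    # hypothetical per-structure confidence for the same decoys.
    quality = [0.9, 0.7, 0.5, 0.3, 0.2]
    confidence = [88.0, 80.0, 62.0, 65.0, 40.0]
    print(f"Spearman rho = {spearman(quality, confidence):.2f}")
```

A value near +1 means the higher-confidence structures are consistently the better ones; a value near 0 means the confidence says nothing about quality. Ranks are used rather than raw values because the two scores sit on unrelated scales and need not be linearly related.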

They also used a similar set of targets and decoys developed for the 14th Critical Assessment of protein Structure Prediction (CASP14) in 2020, a biennial ‘tournament’ in which protein-structure prediction methods are pitted against one another. Here too AlphaFold needed the decoys – in effect, acting as MSAs – to get its bearings for generating good structure predictions for the CASP14 targets.

The need for MSAs now looks to be less of a limitation, though. Given that AlphaFold does seem to get the feel of local energy surfaces, it may not be necessary to gather MSAs from real proteins to help it navigate to the global minimum. A method like Rosetta that can generate sufficiently plausible structures from scratch for a given sequence might be enough for AlphaFold to identify the best of them and improve on it. This is particularly good news for using the algorithm to design entirely new proteins. This AI, then, is even smarter than it seems: encoded in its confidence estimates is a kind of intuition of the physics.

References

1 T C Terwilliger et al, bioRxiv, 2022 (DOI: 10.1101/2022.11.21.517405)

2 C B Anfinsen, Science, 1973, 181, 223 (DOI: 10.1126/science.181.4096.223)

3 J P Roney and S Ovchinnikov, Phys. Rev. Lett., 2022, 129, 238101 (DOI: 10.1103/PhysRevLett.129.238101)

4 R F Alford et al, J. Chem. Theory Comput., 2017, 13, 3031 (DOI: 10.1021/acs.jctc.7b00125)
