The world’s scientific bodies have come together to tackle fraud and plagiarism, but the problem will be tough to crack

Against a backdrop of a rapid increase in misconduct cases, representatives of the world’s scientific societies and academies have banded together to produce a plan to shore up research integrity. The report, Responsible conduct in the global research enterprise, produced by the InterAcademy Council (IAC) and the IAP, the global network of science academies, aims to provide an international consensus on ethical scientific conduct, a need brought on by the changing face of science. The increase in data-intensive approaches, the growth in scientific output, the rise in international collaborations and science’s heightened role in public policy have all meant that research integrity is under ever greater scrutiny, the report points out, and it is essential that all researchers and institutions share common values.

So why is there a need for this report? Although scientific misconduct cases are on the rise, they remain rare, with approximately one in 10,000 papers retracted because of misconduct. However, these papers can have significant knock-on effects, whether fraudulent results lead other researchers to waste time and money attempting to replicate them, or plagiarised papers result in undeserving researchers receiving sought-after grants.

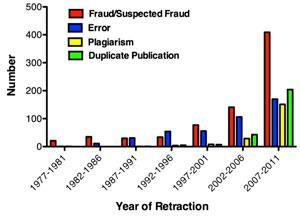

A recent paper, led by Arturo Casadevall from Albert Einstein College of Medicine in the US, has revealed that the majority of papers listed as retracted in the PubMed database of biomedical publications were retracted because of misconduct rather than error.1 This was contrary to what had been previously thought and surprised the authors. ‘The problem was that a lot of the retraction notices are misleading,’ explains Casadevall, ‘the retraction notices are written by the authors and when there has been misconduct they don’t want to admit it, so they would write something like “the results were not reproducible”.’ So the authors used reports from several other sources, including the Office of Research Integrity, the New York Times and the blog Retraction Watch, to get further details about the retractions. They found 155 papers in the PubMed database that were actually retracted because of misconduct, not because of errors or other reasons. These cases of misconduct include fraud, plagiarism and duplicate publication.

Duplicitous duplication

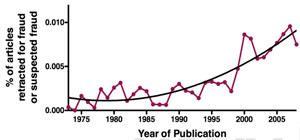

Further data mining revealed that the rate of fraud per published paper has increased from 1 in 100,000 in 1987 to 1 in 10,000 in 2007. The data also showed that the number of cases of plagiarism and duplicate publication is rising rapidly; three-quarters of all papers ever retracted for these reasons have been pulled within the past five years. However, as Casadevall suggests, this rise is at least partly due to increased detection as technological advances have improved plagiarism detection software.

Part of the problem, particularly with duplicate publication, is a lack of awareness. ‘I think a lot of people don’t realise that it is a problem,’ explains Sophia Anderton, assistant manager in editorial production at the Royal Society of Chemistry (RSC). ‘At the RSC, the journals department go out and visit people all over the world and one of the things they talk about is what is acceptable and what isn’t acceptable, and it seems the message is getting through.’

Casadevall and colleagues also looked at where the retractions were coming from, by country of the corresponding author. While there are limitations to these data, the distribution is striking. The three countries associated with the most fraud cases are the US, Germany and Japan – all traditional powerhouses of scientific output – which together account for two-thirds of cases. As startling as this may appear, it is broadly in keeping with their share of scientific output over the past few decades and is likely to be heavily skewed by a few huge fraud cases. For example, German anaesthetist Joachim Boldt has had 80 papers retracted, which account for nearly 10% of all fraudulent papers in the database. However, these countries account for only a quarter of retractions for plagiarism and duplicate publication. These forms of misconduct appear to be much more widely distributed, with China and India responsible for more than twice their share.

Publishing pressures

While the IAC and IAP report says that responsibility for conduct rests with researchers, it warns funding bodies not to ‘promote an environment where researchers face strong incentives to publish as many papers as possible in a short period of time, or face other pressures to lower the quality of research or compromise integrity’. It also advises research institutions against relying on metrics, such as journal impact factors, for decisions on hiring and promotion, as they ‘can be misleading and distort incentive systems in research in harmful ways’.

Casadevall agrees and says: ‘We judge the value of science based on where it is published, rather than what the content is. That’s causing a tremendous problem within science and scientists are responsible for it. Nobody is doing this to us – we are doing it to ourselves.’ It is then perhaps no surprise that the impact factor of a journal correlates with the number of fraud cases associated with it. ‘We are in the midst of completing a study going through all those retractions for fraud and looking at their funding sources and I can tell you that everyone is affected: the private foundations are affected, the government is affected, the institutions are affected,’ he says. ‘This is an issue of interest to anyone who funds any part of science.’

Cong Cao, a Chinese science policy researcher from the University of Nottingham, UK, explains how the system differs in China: ‘They reward the scientists based on the number of papers that they publish. That gives an incentive for those scientists to seek different routes to publish work – legally or illegally, ethically or unethically.’ With the focus on quantity of publications, we see a higher level of plagiarism and duplicate publication, as Casadevall explains: ‘[scientists] change the title and they change part of the abstract and they’re hoping to get two papers for one by sending it to a journal that is not necessarily highly visible.’

Quality control

Two years ago, the Zhejiang University journal started using plagiarism detection software to check all submissions. It found that a third of all submissions were plagiarised. ‘Zhejiang University journal is thought to be a well-regarded journal in China. It’s supposedly high quality … so you can imagine that in second-rate, third-rate journals you would find a large percentage of papers are problematic,’ Cao says.

Part of the problem is the perceived lack of consequences. ‘Not many people who commit plagiarism or other kinds of misconduct in China have been punished,’ Cao explains. ‘You have those very bad examples in front of you, so you do the same considering that you probably won’t be punished as well.’

The IAC and IAP are aiming to maintain the integrity of research, but a set of recommendations for everyone might not do the job. ‘I think different parts of the world have to look at [the data] and see what they’re going to do with their own situation,’ Casadevall says.
