A new large language model (LLM) named Chemma could redefine AI’s contributions to organic chemistry, offering a faster, smarter approach to reaction prediction and synthesis planning. However, some chemists remain wary, warning that scientists should not ‘unthinkingly’ become dependent on such tools.

Despite major advances in synthesis – from drug discovery to renewable materials – designing and building new molecules remains a slow, labour-intensive process. Molecular complexity and a vast chemical space make systematic exploration difficult. ‘Designing efficient, selective reactions [is] heavily dependent on expert intuition and trial-and-error,’ says Yanyan Xu at Shanghai Jiao Tong University. A major bottleneck is the need to experimentally test countless conditions to find those that work.

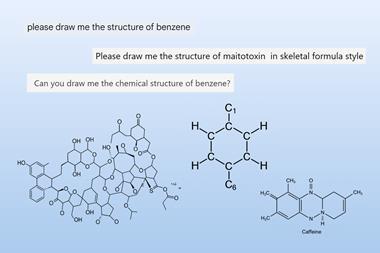

‘LLMs, like GPT-4 and Llama, have already acquired the capability for general knowledge question answering,’ explains Xu. ‘If a general-purpose LLM is fine-tuned on a vast amount of specialised chemical knowledge, [we wondered if we could speed up the synthetic process].’

Llama-led chemistry

Xu and his team fine-tuned Chemma from the open-source Llama-2-7B and trained it on more than 1.28 million ‘question-and-answer pairs’, based on publicly available chemistry datasets. These prompts were designed to teach it three key skills: predicting what a reaction will produce, figuring out how to make a target molecule via retrosynthesis and suggesting the best conditions to run a reaction.
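As a rough illustration of what such question-and-answer pairs might look like, the sketch below turns one reaction record into three pairs, one per skill. The prompt wording, the `QAPair` class and the `build_pairs` helper are assumptions made for this example, not the templates used to train Chemma, and the Suzuki coupling shown is a textbook case chosen only to make the output concrete.

```python
from dataclasses import dataclass


@dataclass
class QAPair:
    task: str
    question: str
    answer: str


# Hypothetical prompt templates; the article does not give the real wording.
TEMPLATES = {
    "forward": "What product forms when these reactants are combined: {reactants}?",
    "retrosynthesis": "Which reactants could be used to make {product}?",
    "conditions": "Suggest conditions for converting {reactants} into {product}.",
}


def build_pairs(reactants: str, product: str, conditions: str) -> list[QAPair]:
    """Turn one reaction record into one Q&A pair per skill."""
    return [
        QAPair("forward",
               TEMPLATES["forward"].format(reactants=reactants),
               product),
        QAPair("retrosynthesis",
               TEMPLATES["retrosynthesis"].format(product=product),
               reactants),
        QAPair("conditions",
               TEMPLATES["conditions"].format(reactants=reactants, product=product),
               conditions),
    ]


# Illustrative record (SMILES strings): bromobenzene + phenylboronic acid -> biphenyl.
pairs = build_pairs(
    reactants="Brc1ccccc1.OB(O)c1ccccc1",
    product="c1ccc(-c2ccccc2)cc1",
    conditions="Pd(PPh3)4, K2CO3, dioxane/water",
)
for pair in pairs:
    print(f"[{pair.task}] Q: {pair.question}\n    A: {pair.answer}")
```

The same reaction record yields a different question for each skill, which is one plausible way a single dataset could be expanded into training examples for all three tasks.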

‘Chemma operates within an active learning loop – suggesting new conditions based on prior experimental results,’ says Xu. In this setup, the model learns through cycles of trial and error, with feedback from chemists guiding it towards the most useful experiments, so that it improves without having to test every possible option.
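The loop Xu describes can be sketched in a few lines. This is a toy under stated assumptions, not Chemma’s method: `suggest` is a simple averaging heuristic standing in for the model, `run_experiment` returns made-up yields in place of real lab work, and the condition labels (`L1`, `B1`, `S1` and so on) are placeholders. A chemist’s guidance could be represented by pruning the candidate list before it is passed in.

```python
from itertools import product
from statistics import mean

# Placeholder labels for ligands, bases and solvents (hypothetical).
LIGANDS = ["L1", "L2", "L3"]
BASES = ["B1", "B2", "B3"]
SOLVENTS = ["S1", "S2"]
CANDIDATES = list(product(LIGANDS, BASES, SOLVENTS))  # 18 combinations


def run_experiment(cond):
    """Stand-in for the lab: returns an invented yield (%) for a condition."""
    ligand, base, solvent = cond
    contribution = {"L1": 10, "L2": 35, "L3": 20,
                    "B1": 5, "B2": 15, "B3": 25,
                    "S1": 0, "S2": 10}
    return float(contribution[ligand] + contribution[base] + contribution[solvent])


def suggest(history, candidates):
    """Stand-in for the model: pick the untested condition whose components
    have the best average yield so far. Untried components get an optimistic
    default score so that they are explored."""
    def component_score(value):
        seen = [y for cond, y in history if value in cond]
        return mean(seen) if seen else 50.0

    tested = {cond for cond, _ in history}
    untested = [c for c in candidates if c not in tested]
    if not untested:
        return None
    return max(untested, key=lambda c: sum(component_score(v) for v in c))


def optimise(budget, candidates=CANDIDATES):
    """Alternate between suggesting a condition and recording its result."""
    history = []
    for _ in range(budget):
        cond = suggest(history, candidates)
        if cond is None:  # every candidate has been tested
            break
        history.append((cond, run_experiment(cond)))
    return history


history = optimise(budget=8)
best_cond, best_yield = max(history, key=lambda item: item[1])
print(f"Best of {len(history)} runs: {best_cond} at {best_yield:.0f}% yield")
```

Each new suggestion depends on the yields recorded so far, so the search concentrates on promising regions instead of sweeping all 18 combinations.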

In a demonstration, Chemma helped identify optimal conditions for a previously unreported Suzuki–Miyaura cross-coupling reaction in just 15 experimental runs, achieving a 67% yield. ‘Traditionally, optimising a complex reaction like Suzuki–Miyaura could take hundreds of trials across weeks,’ says Xu. ‘This demonstrates a five to 10 times acceleration.’

‘It is a useful application and one of the few approaches in chemistry where they actually used a multi-stage training procedure,’ comments Kevin Jablonka at Friedrich Schiller University Jena, who was not involved in the study. ‘What makes retrosynthesis difficult in general is that for real-world applications, you need to go beyond single-step predictions – and this long-horizon planning is certainly something current models still struggle with.’

‘It is also especially impressive that they have validated their work with laboratory experiments,’ adds Joshua Schrier at Fordham University, who was not involved in the study.

Chemma outperformed existing models in key tasks, such as single-step retrosynthesis and yield prediction. ‘Most traditional modelling approaches rely on quantum-chemical calculations, like DFT, to simulate molecular energetics,’ says Xu. ‘While accurate, such methods are computationally expensive and time-consuming.’

Chemma bypasses this by learning chemical reasoning directly from data, allowing it to make instant predictions. That means lower costs and faster results, especially when tackling new problems. ‘Condition screening that would take weeks of DFT or robotic testing can be narrowed down [to] minutes,’ says Xu.

Schrier notes that the small size of the model means it could run on a modest laptop. ‘It is also worth noting that LLMs … have been subject to rapid progress over the past year, and there are much better starting points than the Llama-2-7b base model,’ he says. ‘Presumably, this means that even better-quality results are possible now.’

Chemistry LLMs face criticism

Chemma’s development comes amid criticism that LLMs such as GPT-4 ‘hallucinate’ or generate incorrect results, lack chemical intuition and oversimplify complex reactions. Chemma’s success raises a bigger question: can we trust LLMs in the lab, and what do they mean for the future of human chemists?

‘I like the classic shop safety sign: “This machine has no brain, use your own”,’ says Schrier. ‘LLMs … generate a probabilistic sample of outputs. They don’t fact-check, they don’t have logic. It is up to the human user to take responsibility.’

‘A real strength of this paper is that it envisions the LLM as a tool that enables – but does not replace – a human expert,’ he adds.

A common critique, supported by some tentative research, is that using LLMs can lead scientists to skip critical thinking. Jablonka also argues that the scientific community should not become overly reliant on a single approach to conducting research.

‘We should also be aware of tendencies of centralisation of power and knowledge,’ he comments. ‘This requires that we embrace diverse ways of doing research, open research and very transparent communication.’

‘It’s clear that LLMs are here to stay, and that technologies like these are a useful tool for supplementing chemical decision making,’ says Schrier. ‘It is important that we start to teach students how to effectively use tools like these, as well as how to be critical of their outputs.’

References

Y Zhang et al, Nat. Mach. Intell., 2025, 7, 1010 (DOI: 10.1038/s42256-025-01066-y)
