The special properties of quantum computers should make them ideal for accurately modelling chemical systems, Philip Ball discovers

‘If you want to make a simulation of nature,’ the legendary physicist Richard Feynman advised in 1981, ‘you’d better make it quantum-mechanical.’ By ‘nature’, Feynman meant ‘stuff’: the particles and atoms and molecules we’re made from. His comment came in a talk published the following year,1 and is generally regarded as the founding text of quantum computing. It now looks even more prophetic than ever.

For although we are constantly told that the unique selling point of quantum computers is their enormous speed compared with the classical devices we currently use – a speed-up that exploits the counterintuitive laws of quantum mechanics – it seems that the most immediate benefit will be the one Feynman identified in the first place: we’ll be able to simulate nature better.

In other words – and despite Feynman’s title ‘Simulating physics with computers’ – the initial value of quantum computers may well be their tremendous potential for predicting the properties of atoms and molecules: for chemistry. ‘Many in the community, as well as myself, believe that quantum chemistry and materials science will be the first useful applications of quantum computers,’ says theoretical chemist Alán Aspuru-Guzik of Harvard University in the US.

Quantum rules

Feynman’s proposal was motivated by the fact that, while nature runs according to quantum mechanics at the level of atoms and molecules, our current computational techniques can offer no more than a crude approximation of the quantum rules using classical physics.

In principle, it’s easy to write down a complete quantum description of matter. You just have to set out the Schrödinger equation for all of its constituent fundamental particles. This equation, stated by Erwin Schrödinger in 1924, lets you figure out the mathematical entity called the wavefunction from which all observable properties of the quantum system can be predicted..

The trouble is, the Schrödinger equation is impossible for us to solve for all but the very simplest of systems. You can do it exactly for the single electron in a hydrogen atom: you input the potential-energy function created by the electrostatic attraction of the electron to the atomic nucleus, and use the resulting wavefunction to calculate the allowed electron energy levels, spatial distributions and so on.

But once you try to do that for objects with more than one electron, you’re stuck. Two electrons repel one another, and so you can’t figure out the potential experienced by one until you know the spatial distribution of the other. The usual solution is to approximate: to pretend that the wavefunction of a two-electron system such as a helium atom or hydrogen molecule can be approximated as a product of two one-electron wavefunctions. This approximation of factorizing the wavefunction gets ever cruder as the object has more electrons. Alternatively, you can crunch out a wavefunction by trial and error: making a guess at what it might be and then gradually improving the guess by iterating the Schrödinger equation until you get to a ‘self-consistent’ solution that doesn’t change significantly on subsequent iterations. This approach of finding a self-consistent numerical solution for the wavefunction dates back to the famous Hartree–Fock method of quantum chemistry in the 1930s.

The trouble is, the Schrödinger equation is impossible to solve for all but the very simplest of systems

It might not be ideal, but there is now a veritable industry devoted to finding good, efficient algorithms for calculating approximate solutions to the Schrödinger equation. Quantum-chemical methods can often supply predictions of the properties of molecules – such as bond energies and vibration frequencies – with great accuracy. But the more accurate you want to be, the more costly the computation. That’s why it has been feasible to use fully (albeit still approximate) quantum-mechanical calculations only for rather small chemical systems: molecules with a few atoms. If you want to simulate an entire protein, you need cruder methods such as molecular dynamics, which are based on Newtonian mechanics and at best just build in quantum effects in a rough, heuristic manner.

The problem is that the Schrödinger equation invokes quantum rules, whereas modern computers use classical ones. True, quantum mechanics governs the behaviour of the tiny electronic circuitry in computer components such as ultra-miniature transistors. But in the end, these devices encode information classically in binary form: as bit strings of 1s and 0s.

The quantum computer envisaged by Feynman is different. Its memory and logic elements work according to quantum rules. In particular, a quantum bit (qubit) need not just take the values 1 and 0, but could exist in a quantum superposition of the two states. Superpositions are allowed by the Schrödinger equation: they consist of linear combinations of the wavefunctions of allowed quantum states. It’s common to say that superpositions are in ‘two (or more) states at once’, although more strictly we should say that a quantum system in a superposition of states can be found in any of the superposed states when a measurement is made on it.

In short, because qubits are themselves described by quantum wavefunctions, they can be used to represent – to simulate – quantum objects like electrons. You no longer need to make simplifying approximations: a system of qubits can represent the electrons in a complex molecule exactly, and so a simulation on a quantum computer can supply exact predictions without a big computational overhead.

At least, that’s the idea. But in order for it to work, you have to sustain the mutual quantum states of the qubits: to keep their wavefunctions coherent. Typically, this means placing the qubits in a so-called entangled state, where their wavefunctions evolve in an interdependent way. Entanglement isn’t essential for quantum computing, but one way or another the qubits must be kept coherent. That’s really hard, because coherence is difficult to sustain in a quantum system. If it interacts with its surroundings, the coherence of a collection of qubits ‘leaks out’ into the environment and can no longer be tracked just within the qubits themselves. This process, called decoherence, is what quantum engineers must overcome to make quantum computers that work.

So far it’s only proved possible to sustain coherence among a dozen or so qubits for the times (typically milliseconds) needed to carry out a quantum computation. More than that, and decoherence rapidly sets in: it’s like trying to juggle with too many balls. Most prototype quantum computers today use qubits called superconducting quantum interference devices (Squids), which are rings of superconducting material in which the states of the electrons are described by a single wavefunction. But some researchers are exploring other methods, such as encoding quantum information in the electronic states of atoms or ions suspended in electromagnetic traps, or in spins (quantum objects that have a magnetic orientation a bit like a bar magnet) in crystalline materials such as silicon and diamond. Some think that chemistry might itself be used to furnish the ideal spin-based qubits.2

Simulating nature

When Feynman’s challenge was picked up in 1985 by the theoretical physicist David Deutsch at the University of Oxford, UK, it was with a view to boosting the power of computers. Deutsch’s idea was that a collection of coherent qubits in a collective superposition could encode many more states – many more potential answers to a computational problem – than an equivalent array of classical bits that had to be just in states 1 or 0. In effect, then, said Deutsch, a quantum computer would carry out many calculations in parallel to reach a solution more quickly. A firm believer in the many worlds interpretation of quantum mechanics (which assigns a physical reality to all possible solutions of the Schrödinger equation), Deutsch argued that the quantum computer would be carrying out separate calculations simultaneously in different worlds, before converging on the right answer.

Not many quantum-computation theorists see it that way. But it’s fair to say that in general a quantum computer is able to find answers to a problem without the ‘trial and error’ of having to laboriously try out each possible solution. It takes quantum short-cuts.

A quantum computer is able to find answers to a problem without trial and error

After Deutsch’s proposal, it took several years before anyone figured out a way of getting a hypothetical quantum computer to do anything useful. In short, to do a computation you need an algorithm: a series of steps that will lead from some input data to the desired answer. In 1994 Peter Shor of the Massachusetts Institute of Technology in the US described a quantum algorithm that could be used to factor numbers quickly on a quantum computer – a task that becomes rapidly intractable for very large numbers on classical computers.

Much of the subsequent theoretical work on quantum-computer algorithms focused on finding other calculations that these devices might do quicker: it was all about speed. The usual promise now made in popular accounts of quantum computers is that they will be much faster than your laptop. This is misleading. Quantum computers might do some tasks faster, but it’s not guaranteed that they’ll outperform classical devices for all tasks – and they might not do some well at all. Indeed, it’s been challenging to actually find many algorithms for which quantum computers have a definite advantage.

Amidst all of this, Feynman’s original motivation of simulating the quantum nature of stuff directly got rather eclipsed. But as quantum computers start to make their debut in reality, this focus has re-emerged as the most likely initial benefit of the field.3

Little computers with big ideas

Although the principles of quantum computing are now well established, so far practical devices contain only a handful of qubits. Take IBM’s Quantum Experience, unveiled in 2016, which uses Squids to make logic-gate quantum circuits analogous to those in classical digital computers like your laptop: it has just five qubits. IBM has made Quantum Experience available for general use via a web-based platform, but for chemists there is nothing you can do with it that you couldn’t do better on a classical machine. After all, if each qubit is, loosely speaking, going to simulate an electron or an atomic nucleus, you don’t have much to play with. IBM now has two other prototype devices with 16 and 17 qubits.

One of the key problems for scaling up quantum computers is dealing with errors. These are bound to occur in any computation – a bit flips by accident (because of thermal fluctuation, say) from a 1 to a 0. In conventional computers, errors are handled by keeping copies of each bit. With three copies, say, any discrepancy (two reading 1, the third 0, say) is set right with a simple majority rule: reset the third to agree with the first two. But this redundancy of bit-accounting won’t work for quantum computers because it’s a fundamental principle of quantum mechanics that you can’t make a copy of an arbitrary quantum state. So researchers have been working on new ways of keeping errors under control and preventing them from derailing the calculation.

Many problems in chemistry are optimization or minimization problems: think, for example, of trying to predict the most stable folded state of a protein from its primary sequence of amino acids. Conventional computers have made progress in tackling the protein-folding problem, but it still stretches the capabilities of supercomputers to their limit.

Problems like this are particularly well suited to an approach called adiabatic quantum computation (AQC). Here, instead of the qubits carrying out a specified sequence of operations to find the answer, a pool of interacting qubits is coaxed gently into a ground-state configuration corresponding to the solution to the minimization problem. It’s somewhat analogous to the method of simulated annealing used for minimization problems on classical computers, but enacted with quantum rules.

This is still a dramatic advance compared to the situation five years ago

The general idea is that the pool of qubits is held in a potential that is gradually ‘shaped’ to represent the energy function (Hamiltonian) of the problem at hand, in which case the qubits encode the corresponding ground-state quantum wavefunction. ‘Adiabatic’ here simply means that the collection of qubits is able to remain in its ground state throughout the ‘shaping’ process.

This is the basis of a device made by the company D-Wave in Burnaby, Canada, which they claim is the ‘world’s first commercial quantum computer’, selling for around US$15m. Experts in the field have argued about whether D-Wave is really using quantum computation in the usual sense, but in any event there is no evidence yet that it can outperform classical computers. Besides, there are pros and cons of AQC: it is versatile and relatively simple in principle, but it’s hard to implement error-correction, which inhibits scaling-up because errors accumulate too fast.

Recently, however, researchers at Google’s research labs in Santa Barbara, US, unveiled a prototype nine-qubit device that aims for the best of both worlds, simulating the minimization capability of AQC with digital quantum circuits made from Squids, for which error correction has a well-developed theory.4 The Google device can also simulate interactions between many electrons – an essential capacity for quantum chemistry. The company now has a 22-qubit machine too. ‘These machines are tiny,’ admits physical chemist Markus Reiher of the Swiss Federal Institute of Technology (ETH) in Zurich, Switzerland. ‘But this is still a dramatic advance compared to the situation five years ago.’ It’s possible, he says, that ‘the number of qubits available might advance rapidly now that major companies are making huge investments’.

Doing chemistry the quantum way

Much of computational chemistry is about finding the ground-state energies of molecules, from which their stability and reactivity can be predicted. You can get that from the quantum wavefunction, explains computer scientist Jay Gambetta of IBM’s Yorktown Heights Research Center in the US – but that’s the very thing you can’t work out classically without a lot of approximation.

In 2010, Aspuru-Guzik, working with quantum physicist Andrew White at the University of Queensland in Australia, simulated the dihydrogen molecule using a photon-based quantum computation devised by White’s team.5 Perhaps the simplest molecule of all, dihydrogen was also the first one to be analysed using quantum mechanics in 1927 by the German scientists Walther Heitler and Fritz London. The quantum simulation was able to calculate the correct bond energy to six parts in a million.

The hydrogen molecule was also the subject of a simulation on Google’s quantum computer last year,6 in a collaboration between Aspuru-Guzik, quantum engineer John Martinis at Google, and other groups. The researchers developed a technique they call a variational quantum eigensolver, a kind of quantum version of the iterative minimization used in simulated annealing.7 While the simulation again predicted the molecule’s energy states and bond lengths well, it has the important advantage over the earlier work that Google’s approach can be scaled to larger systems without an exponential escalation in the measures needed for error correction.

That scalability issue is critical – and there’s more to it than just getting more qubits. Keeping all the qubits in a coherent quantum state isn’t the only obstacle to making a working quantum computer. But as with any computer, if you want to solve a computational problem then you need the right program: a suitable algorithm, in other words. This issue isn’t appreciated enough, says Reiher. Feynman’s proposal implied that all you really need to make a useful quantum computation is enough qubits – but it’s far from obvious that this guarantees you can get useful information out of them.

Reiher and his coworkers recently showed, however, that it should be possible. They have figured out how, in principle, to implement an algorithm on a quantum computer that could elucidate a reaction mechanism in order to calculate reaction pathways and rates.

This is one of the biggest objectives of quantum chemistry, but at present it’s hugely difficult for all but the simplest reactions. To understand the catalytic action of an organometallic complex, say, or to accurately predict the rate and stability of drug binding at the quantum level is beyond the capability of today’s computers. Can quantum computers really do better?

Efficient quantum algorithms for some calculations don’t currently exist

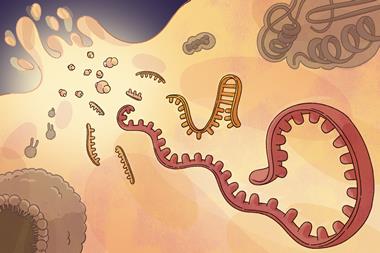

Reiher and his collaborators at Microsoft Research chose as their example the fixation of atmospheric nitrogen into ammonia by the nitrogenase enzyme.8 The full mechanism of this vital process is not yet known, although it involves binding of the nitrogen molecule to a cofactor containing iron and molybdenum (FeMoco) embedded in the enzyme’s binding site.

The key, the researchers say, is only to use quantum computing for the part of the problem for which it is currently well suited – calculation of electronic energies for a given set of molecular orbitals – and to use a classical computer for the rest. This isn’t just pragmatism; the truth is, Reiher says, that efficient quantum algorithms for doing those other parts of the simulation don’t currently exist. The strategy, then, is to use a modestly-sized quantum computer as an ‘accelerator’ linked to a classical computer.

Thus the researchers use conventional classical quantum-chemical methods to optimize the possible structure of FeMoco in the binding site, providing a Hamiltonian function that is then used to find the ground-state energy of the bound complex. From this, one can calculate the kinetics and thermodynamics of the reaction. The process can be repeated with different FeMoco structures to find the pathway with the lowest energy. ‘We are proposing to do the calculations for thousands of structures in different charge, protonation and spin states, binding not just N2 and NH3 but all possible intermediates,’ Reiher explains. ‘We have recently developed means to do this in an automated and efficient manner.’

Reiher and colleagues estimate that accurate simulations would need just a hundred or so logic-gate qubits – compared to about a thousand trillion on a classical computer. ‘If we had more than 200 logical qubits, we could do things in quantum chemistry beyond standard approaches’, he says. ‘And if we had about 5000 such qubits, then the quantum computer would be transformative and disruptive to quantum chemistry. Decades of methods development would be rendered rather unimportant, as one could just solve the full quantum problem for a moderately sized molecule.’

But there’s a catch. To make a ‘logical qubit’ that does its job reliably, you need a good method of error correction. For that, each logical qubit needs to be made up of many physical qubits. Reiher and colleagues estimate that the 100 or so logical qubits they need to crack the nitrogenase mechanism would correspond to around a few hundred thousand to a million physical qubits. That is way more than current devices are anywhere near providing. ‘It will be a few years away, since we haven’t even built a single logical qubit yet,’ says Reiher’s collaborator Matthias Troyer at Microsoft.

The problem is not quite so bad, though, because the calculations can be done in parallel – you don’t need a single million-qubit device, but just many small devices working together, or indeed one 100-qubit device working through the tasks in sequence. ‘It’s not unreasonable to think that we may have such a moderately sized machine in 5–10 years,’ Reiher says. This division of labour might be the key to doing a lot of chemistry on quantum computers, says Gambetta: to develop what computer engineers call a batching architecture for doing relatively small tasks in a systematic sequence. What’s more, he adds, canny choices in encoding the electrons’ degrees of freedom in the computer’s qubits can take advantage of certain symmetry properties that reduce the number of qubits needed.9

Only after working through the question of how to translate a particular reaction mechanism into a quantum computation, Reiher says, ‘did we convince ourselves that a quantum computer can actually be useful in computational chemistry’. The work ‘presents an upper bound on what one needs to solve a really interesting problem’, says Troyer. ‘Future improvements will bring the costs down.’

Metal–atom clusters also have the kind of electronic structures well suited to this kind of quantum simulation, Reiher adds. ‘My view is that transition-metal catalysis – homogeneous, heterogeneous, bioinorganic – will benefit.’ That could include many other important types of reaction, such as C–H bond breaking for polymerization, production of hydrogen for fuel, and fixation of carbon dioxide to mitigate global warming.

Quantum supremacy

Aspuru-Guzik thinks that the first useful quantum simulations are unlikely to be in chemistry itself, but in condensed-matter physics, where there are rather simple many-body systems that lend themselves to straightforward simulation – such as the ‘electron gas’, in which many electrons are free to move more or less independently of one another. ‘Later, the simulation of the electronic structure of molecules will be crucial,’ Aspuru-Guzik says. ‘In this class of molecules, organic materials, which are candidates for batteries, solar cells and organic electronics, are my favourite examples.’

The turning point, Aspuru-Guzik thinks, will be the moment of ‘quantum supremacy’: the first prediction or calculation that a quantum computer could do that a classical one cannot, regardless of its usefulness. He thinks this stage will be reached quite soon – perhaps in a couple of years, and maybe using Google’s quantum computer to simulate random quantum circuits and see what states it produces. Here, he says, ‘a classical computer would not be able to calculate the distribution of states in a reasonable amount of computer time’.

Some chemists are withholding judgement on the promised riches until there’s some confirmation of their reality. ‘There is nothing planned for the next few years that cannot also be done classically, despite some hype,’ says Troyer.

The turning point will be the moment of quantum supremacy

‘I would like to see evidence that quantum computing works on problems like molecular dynamics or quantum chemical calculations faster than “regular” computers, and allow one to input questions and retrieve answers,’ says Arieh Warshel of the University of Southern California, US, whose theoretical work on the multiscale computer modelling of proteins won him a share of the 2013 Nobel prize in chemistry.

And how much does progress depend on having vast computer resources anyway, Warshel wonders, rather than finding smarter ways to exploit what is already available? ‘All my advances were accomplished by finding ways to perform challenging calculations without having enormous computer power, namely by developing multilevel approaches that allow one to solve problems long before the emergence of brute force methods.’

All the same, he wouldn’t turn down the chance. ‘If I will be offered the use of a working quantum computer that can accelerate quantum-mechanical calculations by a factor of 100,’ he admits, ‘I will gladly find a use for it.’

Philip Ball is a science writer based in London, UK

References

1 R P Feynman, Int. J. Theor. Phys., 1982, 21, 467 (DOI: 10.1007/bf02650179)

2 W Wernsdorfer, Nat. Nanotechnol., 2009, 4, 145 (DOI: 10.1038/nnano.2009.21)

3 I Kassal et al, Annu. Rev. Phys. Chem., 2011, 62, 185 (DOI: 10.1146/annurev-physchem-032210-103512)

4 R Barends et al, Nature, 2016, 524, 222 (DOI: 10.1038/nature17658)

5 B P Lanyon et al, Nat. Chem., 2010, 2, 106 (DOI: 10.1038/nchem.483)

6 P J J O’Malley et al, Phys. Rev. X, 2016, 6, 031007 (DOI: 10.1103/PhysRevX.6.031007)

7 A Peruzzo et al, Nat. Commun., 2014, 5, 1 DOI: 10.1038/ncomms5213

8 M Reiher et al, Proc. Natl Acad. Sci. USA, 2017, (DOI: 10.1073/pnas.1619152114)

9 S Bravyi et al, arXiv, 2017, 1701.08213

No comments yet