UK-based researchers have fine-tuned GPT-3 to predict the electronic and functional properties of organic molecules.

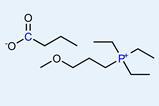

GPT-3 can recognise Smiles – Simplified Molecular Input Line Entry System – a notation that represents chemical structures as text strings, but it typically returns only a broad, non-expert description. For example, GPT-3 will describe the benzene Smiles string as an aromatic compound with a ring structure but cannot provide deeper insight into the molecule's properties.

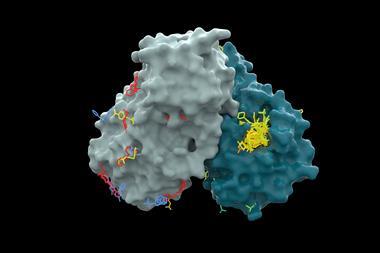

The team, led by Andrew Cooper at the University of Liverpool, alongside Linjiang Chen of the University of Birmingham, used a dataset of 48,182 organic molecules extracted from the Cambridge Structural Database (CSD), together with details of the molecules’ synthetic routes, solid state stability and electronic properties. They fine-tuned GPT-3 on the CSD data, training it to predict and classify the highest occupied molecular orbital (HOMO) and lowest unoccupied molecular orbital (LUMO) values of organic semiconductors when given a Smiles string, which makes its answers to such queries far more useful.
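As a rough illustration of what fine-tuning data for this kind of task could look like, the sketch below turns Smiles strings and orbital energies into prompt–completion pairs with a class label. It is a minimal sketch under stated assumptions, not the team's actual pipeline: the function names, the `###` and `@@@` separators, the single class threshold and the energy values are all hypothetical placeholders chosen for the example.

```python
import json

def to_class(value, thresholds):
    """Return the index of the bin a value falls into (0 = lowest bin).

    `thresholds` is a sorted list of bin edges; this binning scheme is an
    assumption made for illustration.
    """
    return sum(value > t for t in thresholds)

def make_records(molecules, thresholds):
    """Build prompt/completion pairs from (smiles, energy_in_eV) tuples."""
    records = []
    for smiles, energy in molecules:
        records.append({
            # Hypothetical separator and stop tokens
            "prompt": f"{smiles}###",
            "completion": f" {to_class(energy, thresholds)}@@@",
        })
    return records

# Placeholder energies, NOT taken from the study
molecules = [("c1ccccc1", -6.5), ("c1ccc2ccccc2c1", -5.8)]
records = make_records(molecules, thresholds=[-6.0])

# One JSON object per line (JSONL), a common format for fine-tuning data
jsonl = "\n".join(json.dumps(r) for r in records)
print(jsonl)
```

Framing the task as classification means the model only has to emit a short label rather than a precise number, which suits a text-completion model.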

By removing atoms and functional groups from the input Smiles string, the team demonstrated the resilience of the fine-tuned GPT-3 model to incomplete data. Despite the missing information, the property predictions remained accurate and the model could correctly identify the intended Smiles. The team also showed that the model can predict the properties of unknowns: they removed all molecules containing a tetracene fragment from both training and fine-tuning, and the model still successfully returned the properties of these missing species.
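The idea of deleting atoms from an input string can be sketched in a few lines. The example below is only an assumption about how such an ablation might be done, not the procedure used in the study: it uses a deliberately simplified regular expression for atom tokens and hypothetical helper names, and the resulting strings are not guaranteed to be valid Smiles.

```python
import re

# Simplified atom pattern (an assumption): bracket atoms, Br, Cl,
# then single-letter organic-subset atoms and aromatic atoms.
ATOM = re.compile(r"\[[^\]]+\]|Br|Cl|[BCNOPSFI]|[bcnops]")

def ablate_atom(smiles, index):
    """Return the string with the atom token at position `index` removed."""
    matches = list(ATOM.finditer(smiles))
    m = matches[index]
    return smiles[:m.start()] + smiles[m.end():]

def ablations(smiles, symbol):
    """Return every variant of the string with one `symbol` atom removed."""
    return [
        ablate_atom(smiles, i)
        for i, m in enumerate(ATOM.finditer(smiles))
        if m.group() == symbol
    ]

# Removing the oxygen from phenol leaves the benzene ring string
print(ablate_atom("Oc1ccccc1", 0))
# Each carbon of ethanol removed in turn
print(ablations("CCO", "C"))
```

Each ablated string could then be sent to the fine-tuned model and its prediction compared with the one for the complete string.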

Though GPT-3 remains resource intensive, this study highlights the potential for integrating large language models into computational workflows as they become cheaper and more efficient.

References

This article is open access

Z Xie et al, Chem. Sci., 2024, 15, 500 (DOI: 10.1039/d3sc04610a)
