Poor pre-clinical data and an absence of negative results are pushing candidate molecules into the clinic too soon

The reporting of animal studies is biased, inflating the efficacy of drug candidates and pushing them into the clinic before they are ready. This is the verdict of new research, which finds that more treatments go from pre-clinical to human trials than ought to, wasting valuable resources and potentially putting trial participants in danger.1 It adds to a growing body of evidence pointing to problems in the way animal testing is reported and managed.

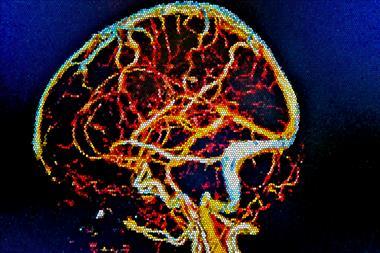

The study, led by John Ioannidis of Stanford University, US, looked at meta-analyses of pre-clinical trials – statistical combinations of multiple studies – in neurological disorders. Meta-analyses have grown in importance in recent times, thanks both to their ability to combine results from many small studies into a picture of a drug’s toxicity and to a strong desire to reduce animal testing. The paper found that only 5% of animal studies in the literature had credible positive effects with no hint of bias. Although the dataset was confined to neurological interventions, Malcolm Macleod, one of the authors of the paper, was adamant that the finding should not be dismissed as a one-off. ‘While I wouldn’t want to claim that it applies across in vivo research … wherever we look, we have found it.’

The findings raise concerns that doomed-to-fail drugs are being allowed to proceed to clinical trials. Macleod cites the example of tirilazad, a drug trialled to treat stroke. Animal studies on the drug were promising, and it was taken forward to clinical trials. But the trials showed an increase in death and disability among patients taking the drug. ‘Tirilazad was probably harmful in humans [and] certainly didn’t do any good,’ he says. One of the reasons for this was the quality of the animal data.2 ‘When we went back to look at the animal data it wasn’t convincing … because of failure to randomise, failure to do blind testing.’

Forgotten research

The group says there are several endemic problems with the system. The first and foremost is so-called ‘publication bias’. Academic journals concerned with the impact and influence of their papers are less likely to publish negative results. So negative results are left unpublished and forgotten, and are unlikely to be included in the meta-analyses which dictate the progression of a drug to clinical trials. Wendy Jarrett, CEO of Understanding Animal Research, says that unpublished results also mean research funding may have been wasted on duplicated studies. ‘If the data isn’t published, then people might not know that it’s already happened.’


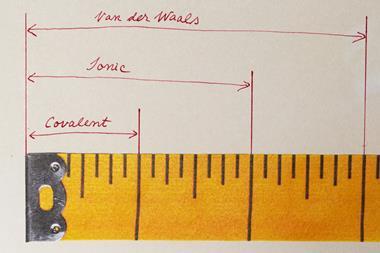

Secondly, research groups will often run several sets of statistical tests and then report the method that gives them the most significant result. The third issue is that of small-study effects. Estimates from smaller datasets are less reliable, which is particularly pertinent in animal trials, where many individual trials are performed with very small groups of animals.
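To see how these three problems can combine, the following is a minimal simulation sketch, not the method used in the study. It assumes a drug with no real effect, invents 2,000 small studies of eight animals per group, 'publishes' only those with a significantly positive result, and pools each set with a simple inverse-variance (fixed-effect) average. Every number, threshold and function name here is hypothetical and chosen only for illustration.

```python
import random
import statistics


def simulate_study(n_per_group, true_effect, rng):
    """Simulate one two-arm animal study.

    Returns (observed mean difference, standard error of that difference).
    Outcomes are drawn from unit-variance normal distributions (an assumption).
    """
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    diff = statistics.mean(treated) - statistics.mean(control)
    se = ((statistics.variance(control) + statistics.variance(treated)) / n_per_group) ** 0.5
    return diff, se


def pooled_effect(studies):
    """Inverse-variance weighted (fixed-effect) pooled estimate."""
    weights = [1.0 / se ** 2 for _, se in studies]
    return sum(w * d for w, (d, _) in zip(weights, studies)) / sum(weights)


def chance_of_false_positive(n_tests, alpha=0.05):
    """Chance that at least one of n_tests is 'significant' when nothing is going on.

    Assumes the tests are independent, which is a simplification: different
    analyses of the same data are usually correlated.
    """
    return 1.0 - (1.0 - alpha) ** n_tests


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical literature: 2,000 small studies of a drug that does nothing.
all_studies = [simulate_study(8, 0.0, rng) for _ in range(2000)]

# Publication bias: only significantly positive results reach the journals.
published = [(d, se) for d, se in all_studies if d / se > 1.96]

pooled_all = pooled_effect(all_studies)
pooled_published = pooled_effect(published)

print("studies run:", len(all_studies), "published:", len(published))
print("pooled effect, all studies:      ", round(pooled_all, 3))
print("pooled effect, published studies:", round(pooled_published, 3))
print("false positive chance with 5 tests:", round(chance_of_false_positive(5), 3))
```

In this toy setting the pooled estimate from every study sits close to zero, while the estimate built only from 'published' studies is clearly positive, even though the simulated drug is inert. The last line shows why picking the best of several analyses matters: under the independence assumption, five tests at the 5% level give roughly a one-in-four chance of at least one spurious hit.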

Some of these problems may be harder to fix than others, but everyone seems to agree that the process should be more transparent. Pre-registering, a practice common in clinical trials where publication is agreed regardless of the outcome, would mean negative results don’t get ignored. Multi-centre trials should help to eliminate small-study effects.

Marc Avey, a researcher with the Canadian Council on Animal Care, feels a greater proportion of trials brought to the clinical stage could succeed if more negative data were published. ‘There are a lot of lessons that we’ve learned from clinical trials,’ he says. ‘People have been asking: “If we apply these methods to the pre-clinical, animal world, will we improve translation in the long run?”’

Testing times

Overcoming the inertia of the old ways of doing things may be hard, but the rewards could be huge. Macleod says that a new system of multi-centre animal trials could save millions of pounds. ‘For stroke you need a dataset of about 1000 animals before you can be confident of what the drug does.’ He proposes a system where 10 or 20 labs take part in the same trial. Information would be uploaded to a central database and then assessed ‘blind’ by one of the other centres. Such a trial would be expensive – around £2 million by Macleod’s estimate – but much cheaper than a clinical trial costing £40 million.
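The arithmetic behind Macleod's proposal can be sketched in a few lines. The figures for total animals, number of labs and costs come from his estimates above; the equal split between labs and between treatment arms, the unit standard deviation and the 10-animals-per-group single-lab study used for comparison are assumptions made purely for illustration.

```python
import math


def animals_per_lab(total_animals, n_labs):
    """Animals each lab contributes, assuming an equal split (an assumption)."""
    return total_animals // n_labs


def standard_error_of_difference(n_per_group, sd=1.0):
    """Standard error of a difference in means between two equal-sized groups.

    sd is the outcome standard deviation, set to 1.0 here as a placeholder.
    """
    return sd * math.sqrt(2.0 / n_per_group)


TOTAL_ANIMALS = 1000          # Macleod's figure for stroke
MULTI_CENTRE_COST = 2_000_000  # pounds, Macleod's estimate
CLINICAL_TRIAL_COST = 40_000_000  # pounds, figure quoted in the article

for n_labs in (10, 20):
    print(n_labs, "labs:", animals_per_lab(TOTAL_ANIMALS, n_labs), "animals per lab")

# Hypothetical small single-lab study: 10 animals per group.
se_single_lab = standard_error_of_difference(10)
# Pooled multi-centre trial: 1000 animals split evenly into two arms.
se_pooled = standard_error_of_difference(TOTAL_ANIMALS // 2)

cost_ratio = MULTI_CENTRE_COST / CLINICAL_TRIAL_COST

print("precision gain (ratio of standard errors):", round(se_single_lab / se_pooled, 2))
print("multi-centre cost as a fraction of a clinical trial:", cost_ratio)
```

Under these assumptions each lab would handle 50 to 100 animals, the pooled estimate would be about seven times more precise than that of the small single-lab study, and the animal trial would cost one-twentieth of the clinical trial it might prevent.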

Jarrett shares Macleod’s optimism. She believes that bioscience is already becoming more open, as its practices grow more visible and acceptable and animal rights extremism retreats. ‘Back in the 90s there was a lot of secrecy, mainly out of fear. Now scientists feel able to be more open.’ She points to projects like figshare, an online data sharing service, as an indicator of scientists’ willingness to address the problem.

But she accepts that things need to be done now if animal testing is to be used to its full potential. ‘[Transparency is] the zeitgeist of the moment, and it cuts across lots of sectors,’ she says, referencing scandals such as LIBOR and MPs’ expenses. ‘But if we can come up with better results with fewer animals, it can only be good for science.’
