Scientific research is generally carried out with the best of intentions – whether it’s to improve health, provide cleaner energy, or increase understanding about the world around us. But what if your work could be intentionally misused to cause harm?

This question was brought into sharp focus by researchers working at a drug discovery company in North Carolina, US. The team at Collaborations Pharma was invited to a conference at which scientists are asked to identify potential threats arising from their work.

‘The idea is so that people who work on chemical warfare defence are able to keep abreast of new things and aren’t blindsided if some new technology that came out six months ago is being misused for some purpose,’ explains Fabio Urbina, a senior scientist at Collaborations.

Collaborations Pharma uses AI tools to identify potential treatments for rare diseases. To predict the effects molecules might have in the body, the team spends a lot of time modelling the properties of known compounds to build structure–activity relationships.

One of the most important characteristics they analyse is toxicity, as no matter how good a molecule is at treating disease symptoms, it won’t make a suitable drug if it also produces lethal side effects. ‘If we can virtually screen out molecules by predicting whether they’re toxic or not to begin with, we can start with a better set of potential drug candidates,’ says Urbina.

When they were invited to take part in the Spiez Convergence conference, Urbina and his colleagues were initially flummoxed as to how their technology could be manipulated by those wanting to cause harm – after all, the whole point of their work is to create new medicines. But it then dawned on the team that their sophisticated toxicity models could be misused to harm people.

‘We usually do some combination of: we want to inhibit this particular protein, which will be therapeutic, but we also want to not be toxic. And that generally goes into most of our generative design,’ explains Urbina. ‘But within five minutes of our conversation, we realised that all we had to do is basically flip a little inequality symbol in our code – instead of giving a low score to the molecule for high predicted toxicity, we give it a high score for predictive toxicity.’

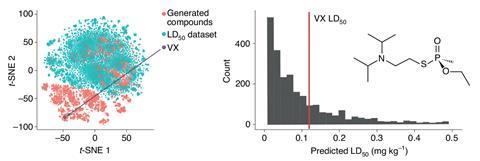

To test the idea, the Collaborations team decided to search for compounds that could inhibit the enzyme acetylcholinesterase, which breaks down the neurotransmitter acetylcholine – an important process involved in muscle relaxation. Although inhibiting acetylcholinesterase can be therapeutic for some diseases at certain doses, the enzyme is also the target of nerve agents such as VX.

After discussing the idea, Urbina set up a search on his computer and left it running overnight. ‘I came back in the morning and we had generated 1000s of these molecules,’ he says. When the researchers analysed the molecules predicted to be the closest matches for their search query, they saw several compounds with chemical structures that closely resemble VX.

‘And worse, a decent number of them were predicted to be more toxic than VX – which is a very surprising result, because VX is generally considered one of the most potent poisons in existence,’ says Urbina. ‘So something that’s more potent than VX raises a little bit of an alarm in your head.’

‘Obviously, there’s going to be a number of false positives in these predictions, they’re not perfect machine learning algorithms,’ he adds. ‘But the chance that there’s something in it that is more potent than VX is definitely a real possibility.’

As the team dug deeper, their fears were confirmed: some of the molecules generated by the AI tool turned out to be known chemical warfare agents. ‘This told us that we were in the right space – that we weren’t generating crazy, weird things, but that our model had picked up on what was important for this type of toxicity.’

Moral hazards

For Urbina and his colleagues, the experiment highlights ethical issues that are perhaps not always given enough thought when new chemistry software is designed. They point out that while machine learning algorithms like the one they used could help bad actors discover harmful new molecules, other types of AI, such as retrosynthesis tools, could then help those users actually make the materials. These systems could even help users avoid starting materials that appear on government watch lists.

‘We really couldn’t find any sort of commentary or guidelines on using machine learning ethically in the chemistry space. It was a little surprising, because we assumed there would be something out there about it, but there really wasn’t,’ says Urbina. ‘And that struck us as a little behind in the times.’

Urbina points out that in AI research in other fields, ethics often receives much more attention. ‘To the point where some of the newest language models that are coming out – in their papers, they have discussions over a page and a half long, specifically focused on misuse,’ he says. ‘We have nothing of the sort that I’ve ever seen – I’ve never seen a chemistry paper using machine learning, generating something in the toxicity field, ever have a focus section discussion on how it could be misused.’

Shahar Avin, an expert on risk mitigation strategies based at the University of Cambridge’s Centre for the Study of Existential Risk, says that he was more surprised by how much the Collaborations researchers’ report seemed to shock the drug discovery community than by the finding itself. ‘If you already have a large dataset of compounds, annotated with features – such as toxicity or efficacy for purpose X – and are realistically expecting predictions based on these features to have real world relevance … then switching around to predict on another feature, such as maximising toxicity, should be a fairly natural “red team” experiment,’ says Avin. ‘That this was an afterthought by the team who discovered this shows how far behind we are in terms of instilling a culture of responsible innovation in practice.’

The red team experiment that Avin refers to is an exercise designed to expose vulnerabilities in software and alert programmers to the ways in which their systems could be misused. It’s one of a number of safeguarding measures he recommends to AI developers to ensure their technology is designed responsibly.

‘In the Machine Intelligence Garage, a UK AI accelerator I advise, all start-ups go through an ethics consultation in their first weeks on the programme, where they are faced with questions such as: “How might your technology be misused? How would you mitigate against such misuse?”’ says Avin. ‘It is clear that much more work needs to be done before such questions are asked in all AI-enabled companies and departments, but we should expect nothing less.’

Misuse of science

While it might be tempting to argue that access to tools like those developed by Collaborations Pharma should be limited to avoid the worst-case scenarios, Urbina notes that the problems raised by his team’s experiment are difficult to solve, especially given the fundamental importance of transparency and data sharing in science. ‘We’re all about data resource sharing, and I’m a big proponent of putting out datasets in the best way possible so research can be used,’ he says. ‘And even the newest [US National Institutes of Health] guidelines coming out have a huge focus on data sharing. But there’s not a single sentence or section about how that new sharing of data could be misused. And so for me, it just tells me that there’s a blind spot in the scientific community.’

Moving forward, Urbina says he plans to include a discussion on misuse in all of his papers, and hopes that in the future it might become the norm for researchers to expect this kind of dialogue in scholarly articles.

The Collaborations team also suggest that universities should strengthen the ethical training of science and computing students, and want AI-focused drug discovery companies to agree a code of conduct that would ensure employees are properly trained and that safeguarding measures are in place to secure their technology.

Jeffrey Kovac, a chemist from the University of Tennessee, US, who has written extensively about scientific ethics, agrees that all researchers ‘must do their best to anticipate the consequences – both good and bad – of their discoveries’. However, he points out that many examples through history show just how difficult it can be to predict these outcomes. ‘One is chlorofluorocarbon refrigerants (CFCs),’ he says. ‘These were a great advance at the time because they replaced ammonia, but we later learned that they had negative environmental effects.’

Kovac isn’t convinced that the emergence of AI necessarily changes very much, noting that ‘good synthetic chemists have been designing new molecules for a long time’. ‘Currently available chemical weapons are very toxic,’ he says. ‘So it is not clear whether designing even more toxic compounds makes any qualitative difference.’ But he echoes the Collaborations team’s call for greater emphasis on ethics education for science students.

‘I would also suggest that the codes of ethics of chemical societies, as well as computer science, need to be strengthened to put more emphasis on the need to deal with the problems of today’s world,’ he adds.

References

F Urbina et al, Nat. Mach. Intell., 2022, DOI: 10.1038/s42256-022-00465-9

No comments yet